Re-thinking Machine Translation Post-Editing Guidelines

Celia Rico Pérez, Universidad Complutense de Madrid

ABSTRACT

Machine Translation Post-Editing (MTPE) is a challenging task. It frequently creates tension between what the industry expects in terms of quality and what translators are willing to deliver as an end product. Conventional approaches to MTPE take as their point of departure the distinction between light and full MTPE, but the division becomes blurred when implemented in an actual MTPE project, where translators find it difficult to differentiate between essential and preferential changes. At the time MTPE guidelines were designed, the role of the human translator in the MT process was perceived as ancillary, a view inherited from the first days of MT research aiming at the so-called Fully Automatic High Quality Machine Translation (FAHQMT). My proposal challenges the traditional division of MTPE levels and presents a new way of looking at MTPE guidelines. In view of the latest developments in neural machine translation and the higher quality of its output, it is my contention that the traditional division of MTPE levels is no longer valid. In this contribution I advance a proposal for redefining MTPE guidelines within an ecosystem specifically designed for this purpose.

KEYWORDS

Post-editing guidelines, machine translation, machine translation quality, MTPE.

1. Introduction

Defined as “the process of improving a machine-generated translation with a minimum of manual labor” (Massardo et al. 2016: 14), Machine Translation Post-Editing (MTPE) frequently creates a tension between what the industry expects in terms of quality and what translators are willing to deliver as an end product. In this respect, one of the crucial aspects of an MTPE project is deciding which guidelines to follow.

Guidelines for MTPE were first advanced by Allen in what has since become a seminal contribution (Allen 2003). Two post-editing levels were defined according to the final use of the translated text: light post-editing for inbound texts (i.e. those that are not to be published), and full post-editing for outbound texts (i.e. those intended for wider dissemination). Light post-editing involved minimal intervention from the translator, while full post-editing aimed at producing human-quality output. Conventional approaches to MTPE take this distinction as their point of departure (Nitzke and Hansen-Schirra 2021), but the division becomes blurred in actual MTPE projects, where translators find it difficult to differentiate between essential and preferential changes and end up performing full post-editing (O’Brien 2011a: 19). As a result, the distinction between MTPE levels seems irrelevant in practice. This division between full and light MTPE arose at a time when MT was almost exclusively used for translating large volumes of technical documentation (as was the case in the automotive and aerospace industries), where the choice between MT for gisting purposes and MT for publication was relevant. At the time these guidelines were designed, the role of the human translator in the MT process was perceived as ancillary, a view inherited from the first days of MT research aiming at the so-called Fully Automatic High Quality Machine Translation (FAHQMT), and MTPE was conceived as an “undesirable final step in MT development” (Vieira 2019: 319).

The paradigm shift experienced by MT in recent years with the advent of neural machine translation (NMT) calls for a different approach to MTPE. With MT engines leaving the research labs and opening up to broader, more generalised use (in contrast with their previous implementation in highly specialised technical contexts), MT is now a real alternative to human translation even in commercial contexts where it was not used just a decade ago. Now that MT is used for almost any purpose, it is only natural that MTPE strategies should evolve in line with the technology.

This paper presents a proposal for redefining MTPE guidelines. In the

sections that follow, I first argue why defining MTPE guidelines

constitutes a challenge and why the two traditional categories of light

versus full MTPE are no longer valid. After this discussion, I review

four main aspects in MTPE that contribute to creating tension in the

process and directly affect the way MTPE is approached. These refer to

the following: a) translators’ expectations towards MTPE guidelines; b)

the blurred nature of MTPE, moving in a certain terminological

instability between revision and translation; c) the difficulty in

determining quality levels in MTPE and the associated concept of “fit

for purpose translation” (Bowker 2020, Way 2018); and d) how the types

of errors produced in NMT output directly affect the way MTPE is

performed. The central part of the article rests in section four. I

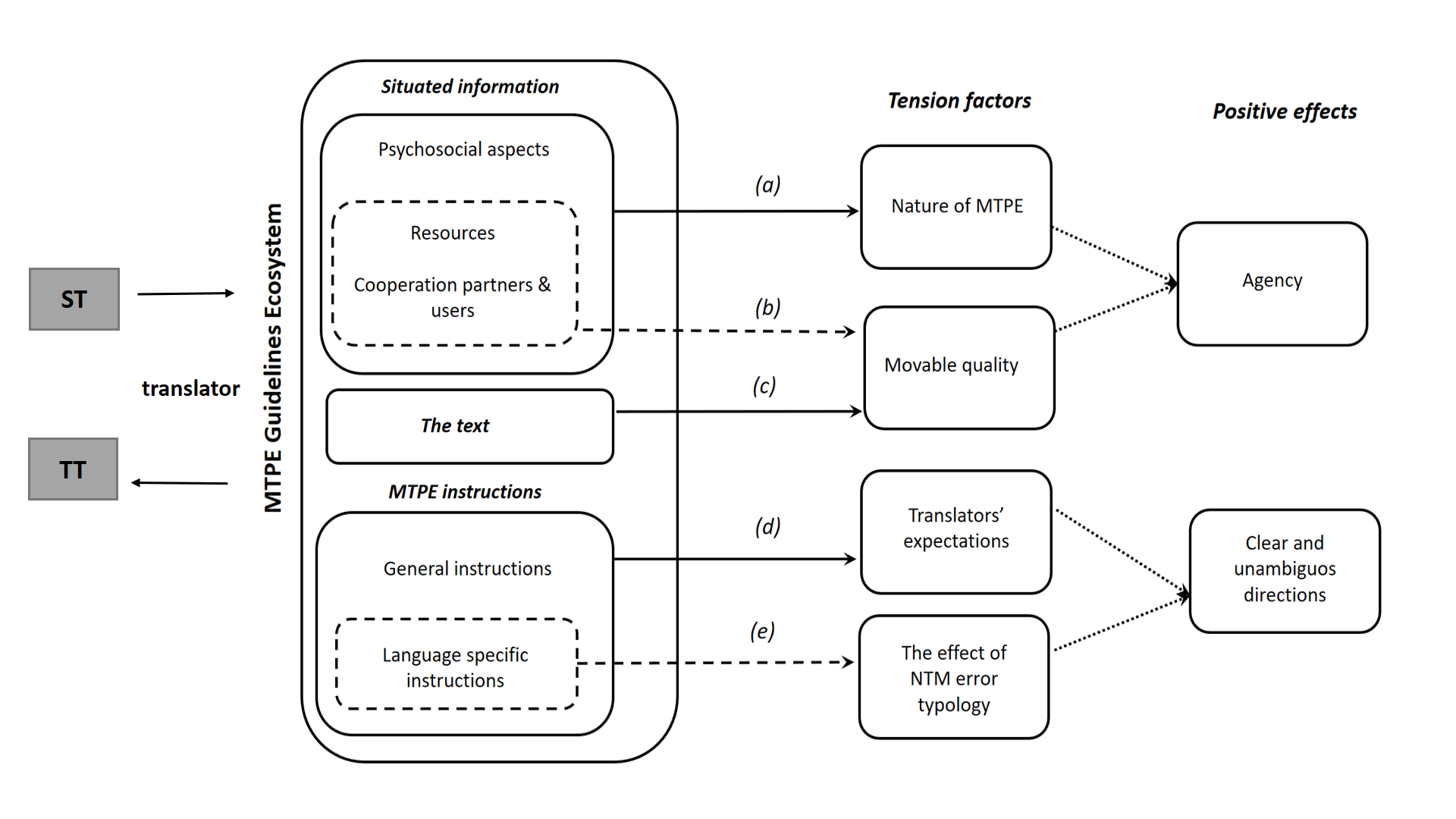

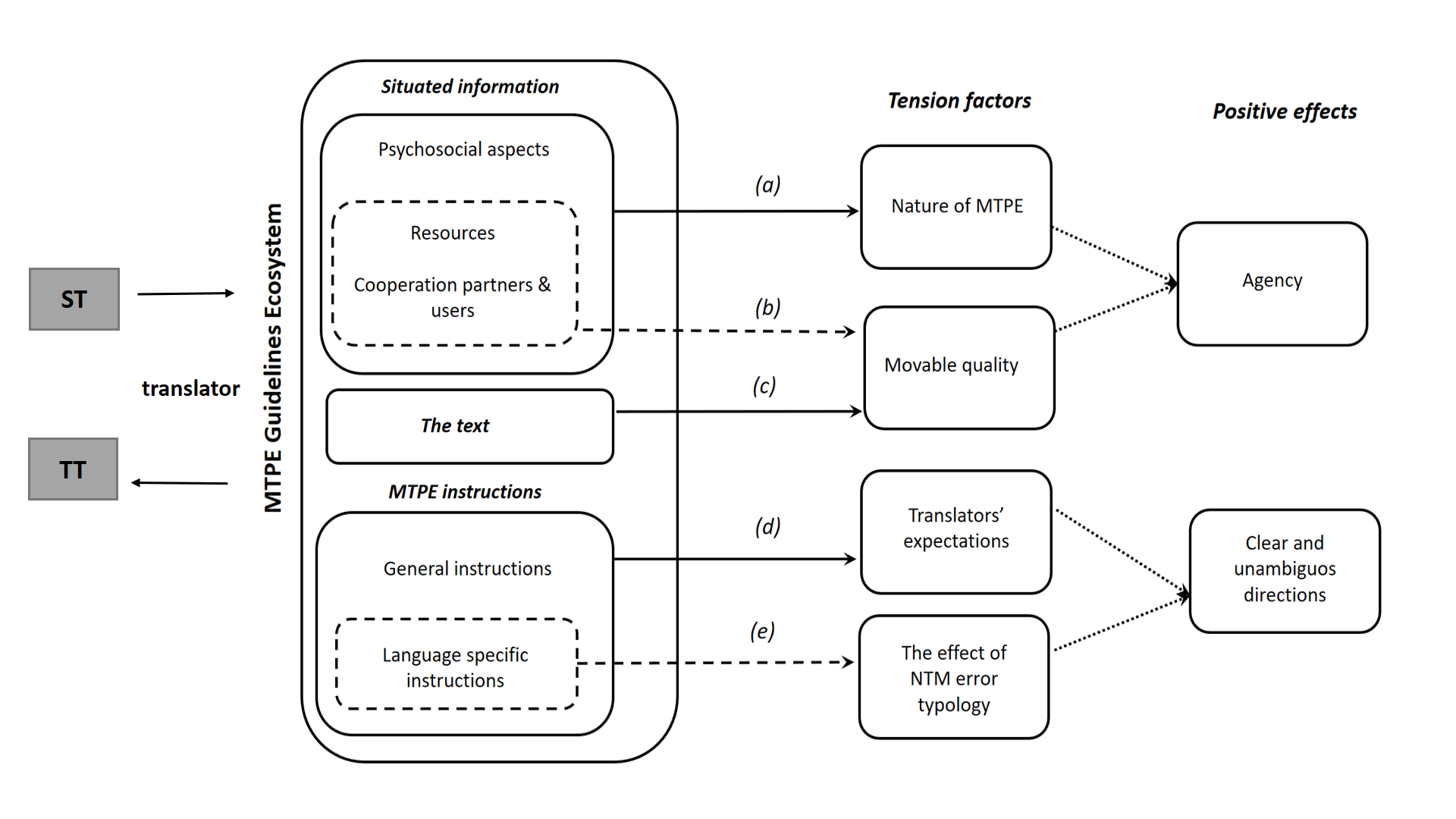

first present the MTPE guidelines ecosystem, which is based on three key

elements: situated information, the text to be post-edited, and MTPE

instructions. This is followed by a consideration of how these elements

in the ecosystem contribute to easing MTPE tension factors, fostering

translators’ agency in the process and contributing to creating clear

and unambiguous MTPE directions.

2. The challenge in defining MTPE guidelines

Following guidelines is a prerequisite for conducting MTPE adequately, but deciding on MTPE guidelines is not an easy task. When it comes to establishing criteria to be implemented in a real scenario, with a decisive impact on costs, turnaround time and quality, the directions provided by the relevant literature seem somewhat insufficient. MTPE specifications are either general recommendations that need further development or rules tailored to a particular MTPE project, which are difficult to replicate across different scenarios.

The authoritative reference for MTPE guidelines is ISO 18587:2017, where the process is described as “a more complex form of work than revision of human translation” (do Carmo 2020: 41). The standard identifies eight requirements for full MTPE that aim at producing output indistinguishable from human translation while using as much of the MT output as possible, a direction that is also indicated for light MTPE. In drawing up MTPE guidelines, the typical approach is to consider a series of aspects such as the type of MT engine, description of the source text, client’s expectations, volume of documentation to be processed, turnaround time, errors to be corrected, document life expectancy, and use of the final text (Allen 2003; Guerberof-Arenas 2013; O’Brien 2011a). On this basis, a distinction is made between rapid, partial and full post-editing, with expectations about how the translation will be used playing a key role in the definition of correction strategies. Hence, an inbound translation approach would lead either to MT with no post-editing (when texts are used for information browsing) or to rapid MTPE (for perishable texts). An outbound translation approach, on the other hand, would require partial or even full MTPE, depending on the quality of the translated output and the final use of the text. Actual implementations of these principles, both in the translation industry and in experimental research settings, usually take MTPE guidelines for granted and only mention them in passing (see, for instance, Carl et al. 2011; Koglin and Cunha 2019; Koponen 2016; Lacruz and Shreve 2014; Sakamoto and Yamada 2020).

In a thorough analysis of MTPE guidelines, Hu and Cadwell (2016) reveal that, even though the division between full and light MTPE is considered standard, different organisations implement it differently, tailoring it to their internal requirements. The authors also find some overlap, especially in the description of light MTPE, while for full MTPE the main differences lie in the specification of style and the expected quality of the target text, depending on its use and text type (Hu and Cadwell 2016: 351). In the academic context, experiments testing MTPE guidelines are scarce. Flanagan and Paulsen (2014) examine how three MA translation trainees interpret MTPE guidelines using TAUS (2010) criteria and report that the trainees have difficulties interpreting the guidelines, which sometimes even causes frustration during the task (Flanagan and Paulsen 2014: 271). This is primarily due to trainee competency gaps, but also to the wording of the guidelines, which at times introduced conflicting directions on how MTPE was to be performed. On a similar note, Koponen and Salmi (2017) report that student translators also appear to interpret the task differently because they find the guidelines difficult to interpret. The authors involve five participants in a pilot study aimed at analysing the edits made in an English-Finnish post-editing task. Guidelines play an essential role in the study and, in some cases, their interpretation is not straightforward, especially when determining whether an edit is necessary (Koponen and Salmi 2017: 145). The authors suggest that this drawback could be mitigated by providing more detailed information and training on necessary and unnecessary edits for particular language combinations (Koponen and Salmi 2017: 144-145). Even if these experiments are limited in scope, as the number of participants is rather small, they are in line with findings in real industry settings. In this context, the subject of guidelines is also scarcely investigated, but the work of Nunziatini and Marg (2020) is revealing in this respect. The authors present an interesting example of how MTPE guidelines are tailored to the needs of an LSP when the traditional division between full and light MTPE is considered “too abstract and inflexible both for translation buyers and linguists” (Nunziatini and Marg 2020: 1). The problem, as the authors indicate, is that there are grey areas not covered by this division and that clients are not really familiar with the different MTPE levels. Translators, for their part, are often not entirely sure which approach would meet clients’ expectations. The solution implemented involves aligning all stakeholders on what types of errors are acceptable for a given text and target audience. This view from the industry is confirmed by Guerrero and Gene (2021), who refer to “gaps and pains” in drafting MTPE guidelines. Their work reports findings from the MTPE Training GALA Special Interest Group, which gathers representatives from all stakeholders in the translation industry. According to the authors, these “gaps and pains” are the following: inconsistency in standards, lack of transparency in existing guidelines (which are usually kept as internal documents by LSPs), overlap between MTPE guidelines and existing translation assignment instructions, subjectivity, and the blurring of the classical distinction between MTPE levels. As a result, translators miss the real scope of the MTPE project, tend to engage in full MTPE, and show a lack of agreement on style between the different guidelines available (Guerrero and Gene 2021: 9-10).

In this context, translators clearly need specific linguistic and technical directions that help them overcome uncertainty and make appropriate decisions, approaching the task with a “certain degree of tolerance and the ability to draw clear boundaries between purely stylistic improvements and required linguistic corrections” (Krings 2001: 16). After all, as Allen (2003: 306) pointed out, “what most people really want to know is what are the actual post-editing principles that support the post-editing concept.” In my view, it is time to rethink this division of MTPE levels, since applying two clear-cut levels of MTPE not only results in subjective decisions but also leaves a grey zone in which translators are left alone with no guiding principles. In fact, as O’Brien and Conlan (2019: 84) note, the increasing use of NMT shifts the boundaries between what is “human translation” and what is “machine translation”, so that the distinction becomes blurred. These moving boundaries also affect MTPE and the way the task should be performed, creating some tension in the process.

3. Tension factors in MTPE

When examining the different voices in translation that address MTPE, one usually observes some tension among the actors involved. On the one hand, the exponential growth in digital content has forced a change in translation models, with an “increased focus on productivity and pressure on cost, along with further technologisation” (Moorkens 2017: 465-466). Accordingly, the industry tends to place MT at the core of its business model, assigning translators a subsidiary role as post-editors. On the other hand, translators’ differing expectations fuel the acceptance/resistance debate over the MTPE task and a consideration of what it really entails: is it just revising and editing, or is it another form of translation? This controversy intensifies when translators do not get clear instructions on how to proceed, and clients, who are not experts in quality methodologies, are unable to determine what types of errors are acceptable in an MTPE request (Nunziatini and Marg 2020). The concept of acceptability thus introduces an additional tension in MTPE, since determining what is acceptable depends on the quality of the MT output and the final use of the translated text. As a consequence, the notion of translation quality is revised under a new paradigm that introduces the idea of “fit for purpose” translation (Bowker 2020; Way 2018), where quality is measured against the relative excellence of the final text for a particular purpose. This represents a move away from the idealistic quality assumptions of early developments in MT, and from the computer science community’s long-held aspiration for MT to reach human parity (Toral 2020). While NMT engines do provide better output than previous MT systems, the human translator is still key in identifying errors and deciding whether they need to be post-edited.

In the following subsections I refer specifically to the aspects that, in my opinion, contribute to creating tension in MTPE, namely: a) translators’ expectations towards MTPE guidelines; b) the nature of MTPE; c) the movable nature of MTPE quality; and d) the effect of NMT error typology. The discussion of these four factors will lay the groundwork for addressing the challenge of defining MTPE guidelines.

3.1 Translators’ expectations towards MTPE guidelines

The extensive literature on translators’ attitudes towards MTPE (Blagodarna 2018; Cadwell et al. 2018; Ginovart et al. 2020; Ragni and Vieira 2022; Vieira 2018; Teixeira and O’Brien 2018, among others) makes little mention of how translators perceive MTPE guidelines or how these affect the way the task is performed. The factors affecting attitudes include price, productivity, effort and cognitive load, but guidelines are seldom mentioned. To my knowledge, Guerberof-Arenas (2013) is the first study of the effect that MTPE guidelines have on how translators perceive the post-editing task. This study analyses the opinions of a group of 24 translators and 3 reviewers, who reported an open and flexible attitude towards MT with some exceptions, “mainly because the quality of certain MT segments was poor or the instructions too cumbersome to follow” (Guerberof-Arenas 2013: 92-93). Price is also identified as a decisive cause of dissatisfaction, especially when combined with clients’ many demands regarding the final quality of the text and the numerous changes to be made if the expected quality was very high (Guerberof-Arenas 2013: 82-86). This suggests that improving MTPE guidelines might contribute to better job satisfaction. More recently, Vieira (2018), investigating translators’ blog and forum postings, found that translators’ resistance might be related more to business practices than to the technology itself. It is my contention that providing a simplified way of performing MTPE, one that is in line with how NMT performs, might likewise improve job satisfaction. In this respect, it is interesting to note that studies of how MTPE is actually conducted in the translation workflow (Silva 2014 or Plaza-Lara 2020, for example) do not refer explicitly to MTPE guidelines, which seem to be taken for granted. Ginovart et al. (2020) do mention MTPE instructions in their broad survey of 66 translation stakeholders (including project managers, MT specialists, linguists and academics) based in 19 different countries. The survey thoroughly investigates how MTPE guidelines are defined and implemented, but their effect on job satisfaction or task perception is not explored.

In the usual narratives of resistance to MTPE, production processes are seen as linear, with translators “cleaning up” errors introduced by the machine (Mellinger 2018: 311). When MTPE takes place at the end of the production line, the distinction between light and full post-editing is a natural consequence of this conception. However, as do Carmo and Moorkens (2021) indicate, MTPE is almost always done “using modern CAT tools [where] MT suggestions appear intermingled with translation memory matches as resources for translators to check and edit” (do Carmo and Moorkens 2021: 39). The authors see this as a natural evolution that “makes MT a resource added to TM, and thus, the distinction between editing TM suggestions and post-editing MT suggestions becomes less obtrusive.” Data from industry practice confirm this, with a typical project using only about 9% of MT suggestions and companies relying heavily on recycling previously translated content (as reported in TAUS 2020). The most common workflow combines MTPE, TM and human translation. If this is the case, why should criteria for MTPE still adhere to the division between light and full? MTPE is no longer performed as a task separate from translation, and this should be reflected in the way instructions are designed.

3.2 The nature of MTPE

The connection of MT to TM in a single platform has the immediate effect of blurring the traditional boundaries between both technologies (O’Brien and Conlan 2019: 84). The source of the translation data also becomes blurred when the translator is presented with segments that come either from the TM database or from the MT system. When the translator is offered two alternative translations for a given segment, one coming from the TM database and the other generated by the built-in MT system, why should each segment be treated differently? What is more, should editing a TM match be done differently from post-editing an MT suggestion? In this context, the difference between editing and MTPE often gets lost with the integration of MT and TM in CAT systems (Jakobsen 2019; Sánchez-Gijón et al. 2019). In a complementary line of argument, do Carmo and Moorkens (2021) maintain that, for MTPE to be a form of revision, we would need to assume that the MT system provides a complete translation, but this is not really the case: “MT text is only an ‘output’ or a set of ‘suggestions’ or ‘hypotheses’ for the translation of a text” (do Carmo and Moorkens 2021: 35-41). Only the translator is responsible for the final text. The authors go a step further and propose that MTPE should be considered a type of translation. If this is so, why should MTPE guidelines differ from those of translation? We can consider MTPE a dynamic process in which the translator constantly interacts with the product of MT, revising the translation as the machine generates it. It is in this interaction that translators take full control of the process. This challenges the contention that MT is central to the translation process and resituates the human element at the centre, thus invalidating the reductionist idea of the machine and the translator competing for quality.

3.3 The movable quality of the post-edited text

As the concept of MTPE evolves and moves closer to translation, I see a need to revisit the concept of quality. Translators need to determine what constitutes quality in order to decide when a segment should be post-edited. However, defining quality is not straightforward, since MTPE introduces a grey zone where the threshold for acceptable quality is movable, and a translation is no longer correct or incorrect but rather acceptable for a given purpose. As Vashee (2021) argues, the business value of a translation in the industry is usually defined not by linguistic perfection but by a combination of factors: its utility to the consumer, basic understandability, availability on demand, and the overall impact on customer experience. In general terms, “useable accuracy” matters more than perfect grammar and fluency. In this respect, Moorkens (2017) points to the movable nature of quality and defines acceptability thresholds according to text lifespan. At some point, even raw MT output may be considered “a worthwhile risk” (Moorkens 2017: 471) for highly perishable texts. This is well illustrated, for instance, in the model that Nitzke et al. (2019: 246) design to guide the decision on whether to use MT. According to this model, it is advisable to perform MTPE on texts with an expected quality level of 60%-80% when the risk level of the texts is low. For texts that require a quality level above 80% (up to 100%), the model recommends not using MT. In a similar vein, Plaza-Lara (2020: 173) shows how quality in MTPE is subordinate to the quality of the MT output. These approaches to quality reveal that MTPE no longer aspires to produce a translation similar to that of a human translator, but rather one that responds to the final use of the text. From this point of view, the notion of MTPE levels (be they full or light) may lose relevance and give way to a different concept of MTPE in which the translator focuses on checking the correct use of terminology and approving the translated content. This type of MTPE is in line with a more flexible way of understanding quality, the so-called “fit for purpose” approach (Bowker 2020; Way 2018).
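The decision rule attributed above to Nitzke et al. (2019) can be made concrete with a short sketch. It is a minimal illustration only, assuming that expected quality is expressed as a percentage and that risk is reduced to a hypothetical low/high label; the names `Risk` and `recommend_mt_use` are invented for this example. The sketch encodes just the two cases summarised in this section, not the authors' full model, and reports every other combination as undetermined.

```python
from enum import Enum


class Risk(Enum):
    # Hypothetical two-level risk scale; the original model may be finer-grained.
    LOW = "low"
    HIGH = "high"


def recommend_mt_use(expected_quality: float, risk: Risk) -> str:
    """Return a recommendation for the two cases summarised in the text.

    expected_quality is the required quality level of the final text, in percent.
    Any case not described in the text is reported as 'undetermined'.
    """
    if not 0 <= expected_quality <= 100:
        raise ValueError("expected_quality must be between 0 and 100")
    # Above 80% (up to 100%): do not use MT. The text gives no risk qualifier
    # for this case, so this sketch ignores risk here.
    if expected_quality > 80:
        return "no MT"
    # Low-risk texts with an expected quality of 60%-80%: perform MTPE.
    if expected_quality >= 60 and risk is Risk.LOW:
        return "MTPE"
    # Other combinations are outside the scope of this summary.
    return "undetermined"


for quality, risk in [(70, Risk.LOW), (90, Risk.LOW), (70, Risk.HIGH), (40, Risk.LOW)]:
    print(quality, risk.value, recommend_mt_use(quality, risk))
```

The unmodelled cases stay explicitly undetermined instead of defaulting to one option. This reflects the grey zone in which the acceptability threshold remains a matter of judgement.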

This new conceptualisation of quality is, to some extent, a consequence of the technologisation of translation. As Doherty (2017: 131) indicates, “the evolution and widespread adoption of translation technologies — especially MT — have resulted in a plethora of typically implicit and differently operationalised definitions of quality and respective measures thereof”. This affects the decisions taken when evaluating quality, decisions that “involve tensions between human subjectivity and machine objectivity.” As the prescribed linear workflow evolves and MT gets mixed with TM, “computer-assisted translation and MT systems further blur the lines of translation quality, insofar as a third agent of text production is introduced” (Mellinger 2018: 319). The new translation workflow has direct consequences for quality: the way the final text is produced (via MT) adds an extra layer of mediation to translation and revision, with “external artefacts” that collaborate with the human translator “toward the end goal of a quality translation” (Mellinger 2018: 321). The translator is no longer the sole creator of the target text but shares this responsibility with the machine.

3.4 The effect of NMT error typology

An additional aspect that affects the criteria for defining MTPE guidelines is the type of errors that NMT engines produce. Different studies show that NMT systems outperform statistical MT systems in many language combinations (particularly for morphologically rich languages) and tend to produce more fluent translations, with improvements in grammar but a possible degradation in lexical transfer (Bentivogli et al. 2016; Neubig et al. 2015; Wu et al. 2016). Other improvements include fewer word order errors and fewer morphological mistakes, although these are offset by mixed results for perceived adequacy (Moorkens 2017: 471). The research conducted by Castilho et al. (2017) points to similar results. It explores possible improvements in NMT output in three particular domains: e-commerce product listings, patents and Massive Open Online Courses (MOOCs). Results show that NMT performs well in terms of fluency but is inconsistent in terms of adequacy, with a greater number of errors of omission, addition and mistranslation (Castilho et al. 2017: 118). These findings should nevertheless be treated with care, as they come from lab tests that provide incomplete and limited results, with errors that are not reproducible when a different MT system or language combination is used (do Carmo and Moorkens 2021: 42). In any case, as Vieira (2019) points out, the fact that NMT provides fluent translations means that errors might be more difficult to spot. In this respect, the study conducted by Koponen and Salmi (2017) with a group of post-editors reports that a significant proportion of edits (34%) were unnecessary: although correct, they did not address actual errors in the MT output. The authors investigated the quality of corrections for the English-Finnish language pair, questioning the assumption that the changes performed by translators are correct and address actual errors in MT. Similar findings are reported by de Almeida (2013) and Temizöz (2016). One possible cause is that the practical implementation of guidelines is not necessarily clear to translators, who may interpret them differently, especially where style is concerned (Koponen and Salmi 2017: 140). In dealing with MT errors, translators tend to over-edit, actively looking for mistakes, as they feel the urge to improve “all linguistic aspects because they want to achieve perfect quality, even though the guidelines state otherwise” (Nitzke and Gros 2021: 21). The preferential changes observed involved lexis (using synonyms or different terms), syntactic reordering, changes of style according to register preferences, insertion and deletion of words, grammatical changes such as verb tense, use of different spelling variants, and insertion or deletion of commas (Nitzke and Gros 2021: 28). Daems and Macken (2021) report similar findings. The authors explore whether there is a difference between revising human translation and post-editing machine translation, and the results show that a significant number of preferential changes were made in all conditions. In the case of post-editing, translators suggested more changes than when they believed they were revising a text produced by a translator (Daems and Macken 2021: 68-69).

As a consequence, translators need to be aware of the error types they might encounter when post-editing, recognising “the special status of the suggestions presented by MT systems, and their unpredictable quality level” (do Carmo and Moorkens 2021: 40). In this context, I see a need to rethink MTPE guidelines so that they are better adapted to the actual situation in MTPE. Such guidelines should cater for translators’ expectations and needs, the true nature of the task, the actual capabilities of the MT system, and the types of errors that might be expected.

4. MTPE guidelines ecosystem

In designing MTPE guidelines, I use the ecosystem as a framework since, in my opinion, it allows for a broad conceptualisation of the different aspects discussed so far. The metaphor of the translation ecosystem originates from situational models of translation that conceptualise the translation process as a complex system. This system includes not only the translator, but also other people (cooperation partners), their specific social and physical environments, and their cultural artefacts (Risku 2004: 19). It is in this broad context that I make a proposal for defining MTPE guidelines.

The remainder of this paper presents the different elements of the MTPE ecosystem: 1) situated information; 2) the text to be post-edited; and 3) MTPE instructions. Figure 1 below represents the guidelines ecosystem, mapping its elements to tension factors and indicating the expected positive effects on the MTPE process.

Figure 1. MTPE guidelines ecosystem, tension factors and positive effects

After presenting the different parts of the MTPE guidelines ecosystem, I discuss how these contribute to easing MTPE tension factors, fostering translators’ agency in the process and contributing to creating clear and unambiguous MTPE directions.

4.1 Situated information

Situated information refers to the contextual information needed for defining MTPE guidelines. I borrow the concept of situated information from Krüger’s (2016a, 2016b) situational model of translation technology, as it provides a comprehensive view that is suitable for examining MTPE (Rico Pérez and Sánchez Ramos 2023). Inspired by situated translation theory (Risku 2004, 2010), Krüger develops a model applicable to translation technology that is premised on the assumption that the translator is the central agent in the translational ecosystem. The essential components of this ecosystem are: 1) the psychosocial factors that affect the cognitive process of translation; 2) the different types of artefacts (or resources) that facilitate the translation process; and 3) the cooperation partners and users (Krüger 2016a). These three aspects are essential for defining MTPE guidelines and are reviewed below.

4.1.1 Psychosocial aspects

When translators are situated as the central agents of the MTPE process, collecting data on psychosocial aspects is essential, as these data help them align their expectations with reality. According to Krüger’s situated model, psychosocial aspects refer to the working environment and professional status, as well as factors such as time pressure and motivation (Krüger 2016a: 318-327). These are integral components that affect the cognitive process of translation and also the post-editing task. Among the aspects to be included in the MTPE guidelines ecosystem, translators should be provided with information on rates, the required scope of the service and the schedule allowed for the project. The question of MTPE rates usually raises controversy among translators, since LSPs often see MTPE as a way of saving money, which “is very likely the source of resentment expressed amongst translators and PMs [Project Managers]” when referring to MTPE (Sakamoto 2018: 8). This is why information on rates, and how they are calculated, is important to translators. Calculating rates is not an easy task, and different approaches can be taken, either as an ex-ante model (establishing a rate before the completion of the project) or as an ex-post model (calculating the actual work performed according to productivity) (Plaza-Lara 2020: 171). As for the other two aspects, project scope and schedule, the information provided should help the translator understand how the MTPE project fits into the overall service commissioned by the client, how time is to be managed, whether there are any pre-production processes that affect the MTPE project, how the translator will receive the raw MT output, and what the MTPE project workflow is.
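The two rate models mentioned above can be contrasted with simple arithmetic. The sketch below is hypothetical throughout. It assumes that the ex-ante model fixes a per-word rate before the project starts. It also assumes that the ex-post model pays for the time actually spent, estimated from the productivity (words post-edited per hour) measured once the work is done. The function names and all figures are illustrative assumptions, not values taken from Plaza-Lara (2020) or from any LSP.

```python
def ex_ante_payment(word_count: int, rate_per_word: float) -> float:
    """Hypothetical ex-ante model: a per-word rate agreed before the project starts."""
    return word_count * rate_per_word


def ex_post_payment(word_count: int, words_per_hour: float, hourly_rate: float) -> float:
    """Hypothetical ex-post model: pay for the time actually spent, estimated from
    the productivity (words post-edited per hour) measured after completion."""
    if words_per_hour <= 0:
        raise ValueError("words_per_hour must be positive")
    hours_spent = word_count / words_per_hour
    return hours_spent * hourly_rate


# Invented figures for a 5,000-word project (illustration only).
words = 5000
print(round(ex_ante_payment(words, rate_per_word=0.05), 2))                    # 250.0
print(round(ex_post_payment(words, words_per_hour=500, hourly_rate=30.0), 2))  # 300.0
```

With these invented figures, the ex-post payment exceeds the ex-ante one because post-editing proceeded more slowly (500 words per hour) than the 600 words per hour at which the two models would break even. Faster post-editing would reverse the relation. This sensitivity to the effort the raw output actually demands is one reason why translators need to know how rates are calculated.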

4.1.2 Resources

In Krüger’s situated model, translation technology forms an integral part of the translator’s cognition together with other environmental artefacts (Krüger 2016b: 121). The complete list of resources examined by Krüger (2016a: 320-326) comprises general working aids (office equipment, furniture, communication devices), digital research and communication resources (corpora, blogs, forums), translation technology in a wider sense (text processing software, file manager, checkers, etc.) and translation technology in a narrow sense (TM systems, terminology management, alignment tools, MT systems and project management tools). It is the latter category that, in my opinion, most directly affects the MTPE guidelines ecosystem, as it determines the very nature of the project and how it is to be performed. In this respect, the translator specifically needs information on the following aspects:

- Glossary availability.

- MT engine, training data, and interaction with translation memories (if any).

- How MTPE is to be performed, either as a static activity or in a dynamic workflow (Vieira 2019: 322). If MTPE is considered static, it takes place once the MT system has generated the translation, and the translator intervenes at the end of the process. In the dynamic paradigm, by contrast, MTPE is carried out in continuous interaction between the translator and the machine, with the MT system reacting to, and learning from, the human edits.

4.1.3 Cooperation partners and users

Cooperation partners are an essential part of the MTPE ecosystem and, according to Krüger (2016a: 316), can take on the following roles: translation initiator, commissioner, ST producer, TT user, TT receiver, co-translator, proof-reader and project manager. In order to perform the MTPE task adequately, translators need information on these partners and on their respective responsibilities and requirements since, in the end, these also determine how the MTPE project is conceived, who is to cooperate with whom, how the work is organised and what the final user of the translation expects.

4.2 The text

The second component in the MTPE ecosystem is the text (Figure 1). The translator needs information about the text to be post-edited on the following aspects:

- Domain: the subject area of the content, which is useful for identifying the most suitable specialised translator to perform the task.

- Potential risk of the information in safety-critical contexts (Canfora and Ottmann 2020; Nitzke et al. 2019).

- Content lifespan: as seen in Section 3.3, content lifespan influences MTPE and marks the threshold for acceptable quality. For content with a very short lifespan, even raw MT output meeting a minimum quality threshold can be acceptable (Moorkens 2017: 471).

- Communicative purpose of the text: whether the text is used for internal or for external communication. Together with content lifespan, this affects the way quality is perceived.

4.3 MTPE instructions

This is the last element in the MTPE guidelines ecosystem and one of its fundamental parts. Ideally, criteria for MTPE guidelines should take the following aspects into account:

- They should include instructions that go beyond mechanically cleaning up errors in the MT output.

- They should recognise that MT output is integrated with output from other tools (mostly TM and terminology).

- They should provide a flexible way of understanding quality.

- They should help translators understand the likely error types of the MT system they are dealing with.

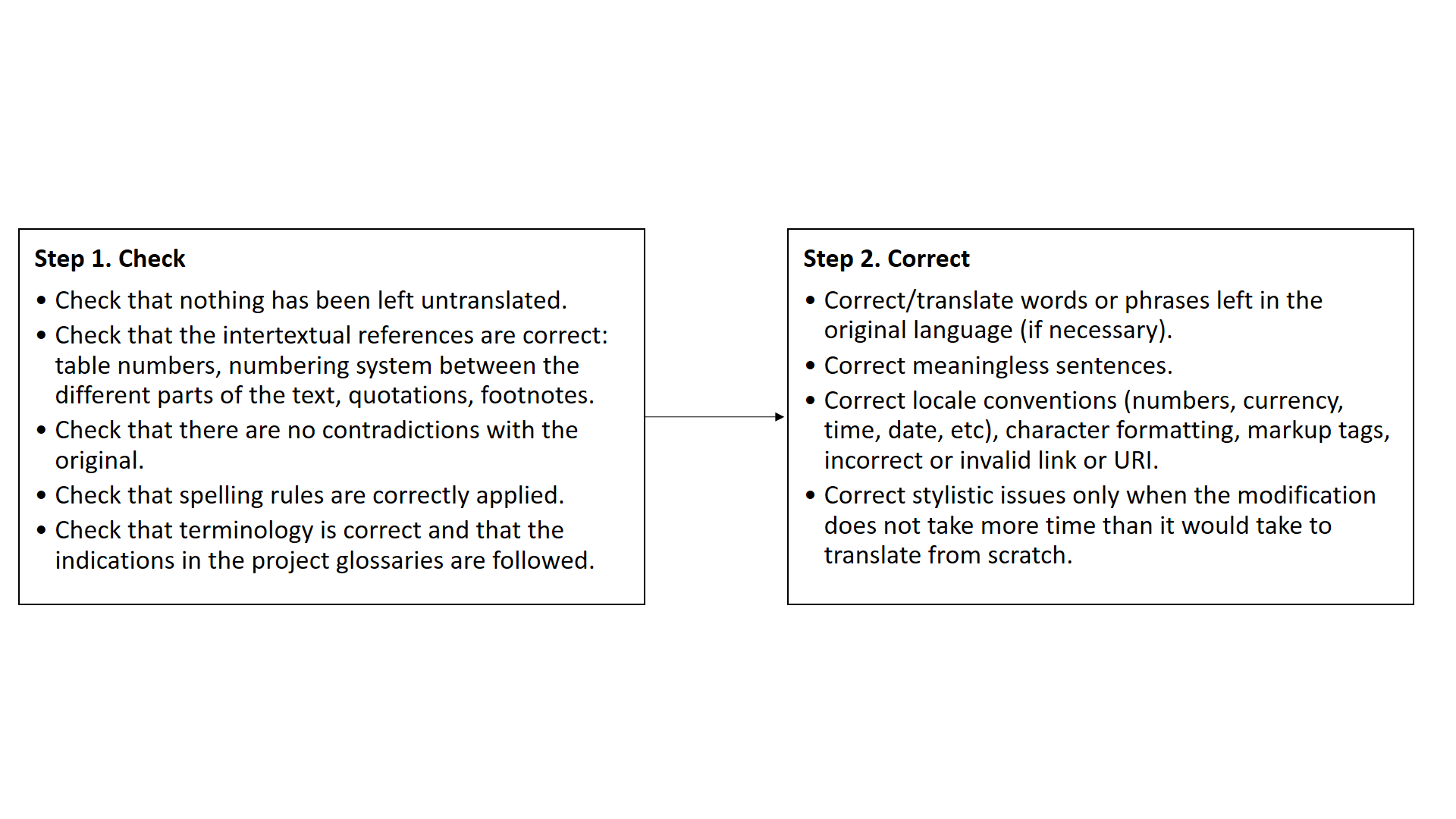

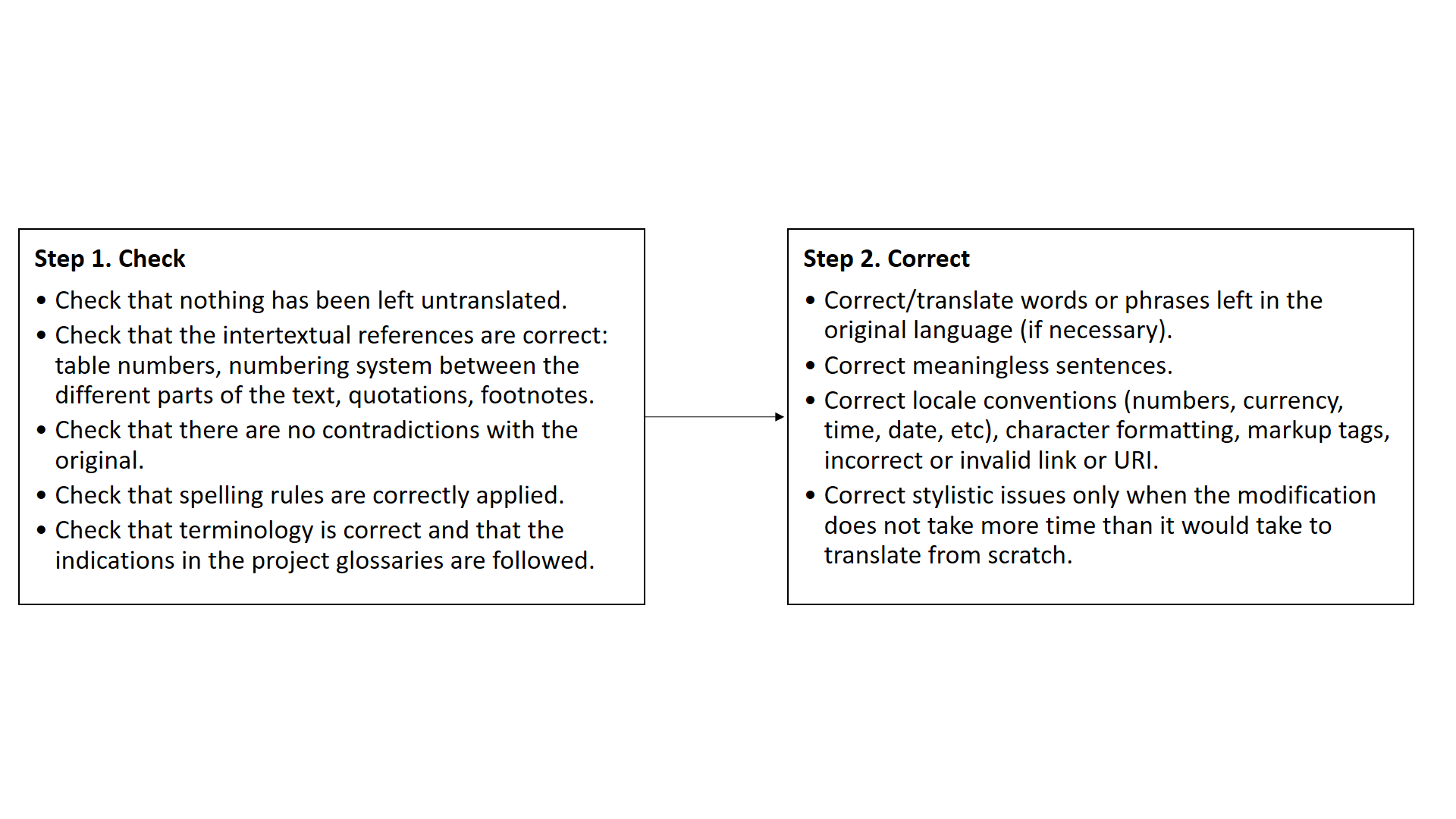

To organise instructions more effectively, I suggest preparing two complementary sets: general instructions and language-specific instructions.

4.3.1 General instructions

When outlining general instructions, we need to take into account translators’ expectations of clear and unambiguous guidelines (see Section 3.1 above). It is in this respect that I suggest breaking away from the traditional light vs. full MTPE approach, as already discussed, and advance a proposal that groups general instructions into two complementary tasks: check and correct. In the first task (check), the translator examines the MT output against the source text, while in the second (correct), work concentrates on the MT output itself in order to make the necessary corrections according to the quality threshold set by the preceding elements in the MTPE ecosystem. The actions involved in each task are shown in Figure 2.

Figure 2. Check and correct: actions involved

4.3.2 Language-specific instructions

Together with general instructions, language-specific guidelines are relevant since, as Sarti et al. (2022) state, NMT post-editing performance is highly language-dependent and influenced by the typological relatedness of the source and target languages. Language-specific rules for MTPE indicate, for example, the use of a particular locale, lexical collocations or specific sentence structures (see, for instance, the work of Mah 2020). It is key to provide translators with a set of representative examples for each language so that they know what to expect from the MT output, how to deal with the different error types and what MTPE implies in each case.

4.3.3 Matching MTPE guidelines to MTPE tension factors

Framing MTPE guidelines in the ecosystem described above has, in my opinion, the positive effect of easing tension factors in the MTPE process. I now return to Figure 1 to show how each element in the ecosystem matches each tension factor. The correspondences are represented by lines a to e in Figure 1.

- Lines a and b. These match the elements of situated information (i.e. psychosocial aspects, resources and cooperation partners) to the nature of MTPE and to the movable quality of the post-edited text. Line a, relating situated information to the nature of MTPE, is drawn on the following grounds. We have seen how the interaction of MT and TM blurs the origin of the data the translator deals with, which turns MTPE into a type of translation, as discussed in Section 3.2. In other words, the nature of MTPE is affected by the way the task is performed in interaction with MT and TM. In this context, translators are able to understand the true nature of the MTPE task when provided with information on aspects that affect the project as a whole: working environment, deadline(s) and rates, glossary availability, the MT engine used for the translation, the MTPE workflow, and who is participating in the project.

Line b establishes a more specific relationship between resources and cooperation partners, on the one hand, and the movable quality of the post-edited text, on the other. As argued above, quality in MTPE is a movable concept determined by a series of factors such as the utility of the text, its impact on customer experience or its lifespan. Understanding quality in this more flexible way allows different quality thresholds to be defined. In this connection, translators are able to understand this movable quality when they are equipped with information on which MT engine is used and its expected output quality, what the client expects, and how the final text is to be used. The positive effect of providing translators with all this information is that they gain agency in the MTPE process by fully understanding the nature of the task and approaching quality as dependent on several factors.

- Line c. This line represents how information about the characteristics of the text helps the translator decide how best to perform MTPE. When translators know the characteristics of the text (its expected lifespan, its communicative purpose or its potential risks), they are able to decide which quality threshold is most adequate for the post-edited text. This again brings agency to the process, as translators regain control by deciding how best MTPE is to be conducted.

- Lines d and e. Both refer to how MTPE instructions help relieve tension factors concerning both translators’ expectations towards MTPE and the way NMT errors are dealt with. I have already discussed how translators find guidelines difficult to interpret for several reasons: they may be too cumbersome to follow or too abstract, they may overlap, or they may even leave grey zones that are not covered by the division into light and full MTPE. I believe that the tension created by vague instructions can be overcome by providing a simplified list of actions, as in “check and correct”, which are also specifically tuned to typical NMT errors in specific language pairs. Translators would thus benefit from working with clear and unambiguous directions.

Defining MTPE guidelines involves a decision-making process that is not straightforward. MTPE levels are subordinate to the quality of the MT output, and several other issues are involved: subjectivity in the task, the client’s expectations, effort and expected productivity. Translators are traditionally presented with a set of guidelines divided into light vs. full MTPE levels. In view of the latest developments in NMT and the higher quality of the output it provides, my proposal is that this traditional division is no longer valid. NMT systems outperform previous approaches in many language combinations and tend to provide highly fluent output with fewer errors. Accordingly, MTPE guidelines should break away from MTPE levels, as the boundaries between them are becoming blurred.

This paper challenges the traditional division of MTPE levels and presents a proposal for a new way of looking at MTPE guidelines. After reviewing the relevant literature on MTPE guidelines, I have discussed why defining criteria for MTPE is not a straightforward task. I have then presented some factors that create tension in MTPE and influence the way guidelines should be designed. The core of the paper concentrates on the definition of a set of MTPE criteria in what I call the MTPE ecosystem. This ecosystem contributes to creating clear and unambiguous directions, with the translator regaining agency in the MTPE process. This paper is an attempt at designing a model for MTPE guidelines that supports translators in the process. The model is conceived so that tension factors can be eased and negative attitudes towards MTPE prevented. I have argued that guidelines tend to be either too general or too context-specific to be replicated straightaway. In this sense, the model I present is a valuable instrument, as it collects in a single source all the aspects influencing the translator’s decisions, so that MTPE guidelines can be easily drawn up, adequately supported with actual examples and, more importantly, shared and replicated across different MTPE projects.

The model still needs to be validated and, in this connection, I see potential avenues for future research in a series of aspects. The first concerns the informational or cognitive load involved in the proposed ecosystem. I pointed out that many current MTPE guidelines are perceived by translators as overly cumbersome and, consequently, the MTPE model I present also needs to be tested in this regard. In the translation industry, the rationale for using MTPE rests on the assumption that it requires less effort than translation “from scratch”, but, as different studies show (Koglin and Cunha 2019; Koponen 2016; Moorkens 2018; Vieira 2017, among others), the cognitive load involved in identifying errors and deciding on corrections is high. Therefore, whether translators are able to access, and have the time to process, the information required in the ecosystem model needs to be tested. This question would ideally be addressed by participant-oriented empirical studies of the adoption and perception of the MTPE ecosystem, along the lines of the work of Cadwell et al. (2016), Rossi and Chevrot (2019) or Schnierer (2019).

A second and complementary question is how the MTPE model can actually be implemented in a working environment. In studying working conditions, Liu (2020) reports that translators attach great value to accessing all kinds of information that might help them understand the translation assignment, including the possibility of communicating with clients, authors or end-users, or knowing the intended use of the translation. Even if this study does not explicitly refer to MTPE guidelines, we can infer that having access to them might facilitate the translators’ work. Several studies (see, for instance, Drugan 2017) have established that translators can struggle to get even basic information about a translation’s broader context, and at times they may not be able to get support when they face problems or challenges at work. Implementing MTPE guidelines can take the form of simple data sets, each containing the required information for each of the elements in the ecosystem. We find this type of implementation for post-editing rule-based machine translation output in Rico Pérez and Díez Orzas (2013), where the data sets provide practical information on the PE project in terms of full versus light post-editing. A potential area for research would be how these data sets could be adapted to the requirements of the MTPE ecosystem, and whether putting them into practice contributes in any way to improving job satisfaction. It is my contention that providing a simplified way of performing MTPE can contribute to a better understanding of the task and, consequently, to reducing resistance from translators, who might feel a greater sense of agency and have greater confidence in the utility of MT.
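As an illustration of what such a data set might look like, the following Python sketch groups the information for the three elements of the ecosystem (situated information, the text, and MTPE instructions) into one record and reports which items have not yet been filled in. It is a hypothetical sketch under stated assumptions, not the format used by Rico Pérez and Díez Orzas (2013): all class and field names, and the example values, are invented for illustration, and the check/correct entries are placeholders paraphrasing Section 4.3.1 rather than the actions listed in Figure 2.

```python
from dataclasses import dataclass, field, fields
from typing import Dict, List


@dataclass
class SituatedInformation:
    """Element 1 (hypothetical field names)."""
    # Psychosocial aspects: rates, scope of the service, schedule
    rates: str = ""
    scope: str = ""
    schedule: str = ""
    # Resources: glossary, MT engine, workflow ("static" or "dynamic")
    glossary: str = ""
    mt_engine: str = ""
    workflow: str = ""
    # Cooperation partners and users (e.g. commissioner, proof-reader)
    partners: List[str] = field(default_factory=list)


@dataclass
class TextInformation:
    """Element 2: the text to be post-edited."""
    domain: str = ""
    risk: str = ""
    lifespan: str = ""
    purpose: str = ""  # internal or external communication


@dataclass
class Instructions:
    """Element 3: general (check/correct) and language-specific instructions."""
    check: List[str] = field(default_factory=list)
    correct: List[str] = field(default_factory=list)
    language_specific: Dict[str, List[str]] = field(default_factory=dict)


@dataclass
class GuidelinesDataSet:
    situated: SituatedInformation
    text: TextInformation
    instructions: Instructions

    def missing_items(self) -> List[str]:
        """Return 'element.field' names that are still empty, i.e. the
        information the translator has not been given yet."""
        missing = []
        for part_name in ("situated", "text", "instructions"):
            part = getattr(self, part_name)
            for f in fields(part):
                if not getattr(part, f.name):  # "", [] and {} count as empty
                    missing.append(f"{part_name}.{f.name}")
        return missing


data_set = GuidelinesDataSet(
    situated=SituatedInformation(
        rates="ex-ante, agreed per word", schedule="5 working days",
        mt_engine="generic NMT engine", workflow="static",
        partners=["project manager", "proof-reader"]),
    text=TextInformation(domain="consumer electronics", lifespan="short",
                         purpose="external"),
    instructions=Instructions(
        check=["examine the MT output against the source text"],
        correct=["make the corrections required by the quality threshold"]),
)
print(data_set.missing_items())
# ['situated.scope', 'situated.glossary', 'text.risk', 'instructions.language_specific']
```

Listing the gaps explicitly is one possible way of addressing the concern raised above that translators may struggle to obtain basic information about a project: whoever prepares the guidelines can see at a glance which elements of the ecosystem have not yet been documented.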

References

- Allen, Jeff (2003). “Post-editing.” Harold

Somers (ed.) (2003). Computers and Translation. A translator’s

guide. Amsterdam/Philadelphia: John Benjamins, 297-317.

- Bentivogli, Luisa, Arianna Bisazza, Mauro Cettolo, and

Marcello Federico (2016). “Neural versus Phrase-Based Machine

Translation Quality: A Case Study.” Proceedings of the 2016

Conference on Empirical Methods in Natural Language Processing,

257–267, Austin, Texas, November 1-5, 2016.

https://aclanthology.org/D16-1025.pdf (consulted 13.06.2023).

- Blagodarna, Olena (2018). “Insights into

post-editors’ profiles and post-editing practices.” Revista

Tradumàtica. Tecnologies de la Traducció 16, 35-51.

- Bowker, Lynne (2020). “Fit-for-purpose

translation.” Minako O’Hagan (ed) (2020). The Routledge Handbook of

Translation and Technology. London: Routledge, 453-468.

- Bundgaard, Kristine and Tina Paulsen Christensen

(2019). “Is the Concordance Feature the New Black? A Workplace Study of

Translators’ Interaction with Translation Resources while Post-Editing

TM and MT Matches.” The Journal of Specialised Translation, 31,

14–37. https://doi.org/10.26034/cm.jostrans.2019.175 (consulted

13.06.2023).

- Cadwell, Patrick, Sheila Castilho, Sharon O'Brien, and Linda Mitchell (2016). “Human factors in machine translation and post-editing among institutional translators.” Translation Spaces 5(2), 222-243.

- Cadwell, Patrick, Sharon O’Brien, and Carlos S. C.

Teixeira (2018). “Resistance and accommodation: factors for the

(non-) adoption of machine translation among professional translators.”

Perspectives 26(3), 301-321.

- Canfora, Carmen and Angelika Ottmann (2020).

“Risks in neural machine translation.” Translation Spaces 9(1),

58-77.

- Carl, Michael, Barbara Dragsted, Jakob Elming, Daniel

Hardt, and Arnt Lykke Jakobsen (2011). “The Process of

Post-Editing: A Pilot Study.” Copenhagen Studies in Language

41, 131-142.

- Castilho, Sheila, Joss Moorkens, Federico Gaspari, Iacer

Calixto, John Tinsley, and Andy Way (2017). “Is Neural Machine

Translation the New State of the Art?” The Prague Bulletin of

Mathematical Linguistics 108, June, 109–120

https://ufal.mff.cuni.cz/pbml/108/art-castilho-moorkens-gaspari-tinsley-calixto-way.pdf

(consulted 13.06.2023).

- Daems, Joke and Lieve Macken (2021). “Post-editing human translations and revising machine translations.” Maarit Koponen, Brian Mossop, Isabelle S. Robert and Giovanna Scocchera (eds) (2021). Translation Revision and Post-editing. Industry Practices and Cognitive Processes. London: Routledge, 50-70.

- de Almeida, Giselle (2013). Translating the

post-editor: An investigation of post-editing changes and correlations

with professional experience across two romance languages. PhD

thesis, Dublin City University. http://doras.dcu.ie/17732/ (consulted

13.06.2023).

- DePalma, Don (2013). Post-editing in

practice.

https://www.tcworld.info/e-magazine/translation-and-localization/post-editing-in-practice-459/

(consulted 13.06.2023).

- do Carmo, Felix (2020). “‘Time is money’ and the value of translation.” Translation Spaces 9(1), 35–57.

- do Carmo, Felix and Joss Moorkens (2021).

“Differentiating editing, post-editing and revision.” Maarit Koponen,

Brian Mossop, Isabelle S. Robert and Giovanna Scocchera (eds) (2021).

Translation revision and post-editing. London: Routledge,

35-49.

- Doherty, Stephen (2017). “Issues in Human and

Automatic Translation Quality Assessment.” Dorothy Kenny (ed.) (2017).

Human issues in translation technology. London: Routledge,

131-148.

- Drugan, Joanna (2017). “Ethics and social

responsibility in practice: Interpreters and translators engaging with

and beyond the profession.” The Translator 23(2),

126–142.

- ELIS (2023). European Language Industry

Survey. Trends, expectations and concerns of the European language

industry. ELIS Research.

https://elis-survey.org/wp-content/uploads/2023/03/ELIS-2023-report.pdf

(consulted 13.06.2023).

- EUATC (2013). EUATC Survey. Expectations and

Concerns of European Translation Companies.

https://elis-survey.org/wp-content/uploads/2022/02/2013-EUATC-Survey-Report.pdf

(consulted 13.06.2023).

- Flanagan, Marian and Tina Paulsen Christensen

(2014). “Testing post-editing guidelines: How translation trainees

interpret them and how to tailor them for translator training purposes.”

The Interpreter and Translator Trainer 8(2), 276–294.

- Ginovart Cid, Clara, Carme Colominas, and Antoni

Oliver (2020). “Language industry views on the profile of the

post-editor.” Translation Spaces 14, 283 – 313.

- Guerberof-Arenas, Ana (2013). “What do professional translators think about post-editing?” The Journal of Specialised Translation 19, 75-95. https://www.jostrans.org/issue19/art_guerberof.pdf (consulted 13.06.2023).

- Guerrero, Lucia and Viveta Gene (2021).

“Workshop: Drafting effective machine translation post-editing

guidelines.” TC43 Asling, 18th Nov. 2021

https://www.asling.org/tc43/wp-content/uploads/2021/11/TC43-Day3-WS-GuerreroGene-Drafting-eff-MTPE-guidelines.pdf

(consulted 13.06.2023).

- Hu, Ke and Patrick Cadwell (2016). “A

Comparative Study of Post-editing Guidelines.” Baltic Journal of

Modern Computing, 4(2), 346-353.

- ISO (International Organization for

Standardization) (2017). Translation Services – Post-Editing of

Machine Translation Output – Requirements. ISO 18587:2017. Geneva:

International Organization for Standardization.

https://www.iso.org/standard/62970.html (consulted 13.06.2023).

- Jakobsen, Arnt Lykke (2019). “Moving

translation, revision and post-editing boundaries.” Helle Dam, Matilde

Brøgger, Karen Zethsen (eds) (2019). Moving Boundaries in

Translation Studies. London: Routledge, 64-80.

- Koglin, Arlene and Rossana Cunha (2019). “Investigating the post-editing effort associated with machine-translated metaphors: a process-driven analysis.” The Journal of Specialised Translation 31, 38-59.

- Koponen, Maarit (2016). “Is machine translation post-editing worth the effort? A survey of research into post-editing and effort.” The Journal of Specialised Translation 25, 131-148.

- Koponen, Maarit and Leena Salmi (2017).

“Post-editing quality: Analysing the correctness and necessity of

post-editor corrections.” Linguistica Antverpiensia, New Series:

Themes in Translation Studies 16, 137–148.

- Krings, Hans P. (2001). Repairing Texts.

Empirical Investigations of Machine Translation Post-Editing

Processes. Kent: The Kent State University Press.

- Krüger, Ralph (2016a). “Situated LSP Translation

from a Cognitive Translational Perspective.” Lebende Sprachen

61(2), 297–332.

- Krüger, Ralph (2016b). “Contextualising Computer-Assisted Translation Tools and Modelling Their Usability.” trans-kom 9(1), 114-148.

- Lacruz, Isabel, Gregory M. Shreve, and Erik

Angelone (2014). “Pauses and Cognitive Effort in Post-editing.”

Workshop on Post-Editing Technology and Practice, San Diego,

California, USA. Association for Machine Translation in the Americas.

https://aclanthology.org/2012.amta-wptp.3/ (consulted

13.06.2023).

- Liu, Christy Fung-Ming (2020). “How Should

Translators Be Protected in the Workplace? Developing a Translator

Rights Model Inventory.” Hermēneus. Revista de Traducción e

Interpretación 22, 243-269.

- Mah, Seung-Hye (2020). “Defining language

dependent post-editing guidelines for specific content.” Babel.

Revue internationale de la traduction / International Journal of

Translation 66(4-5), 811-828.

- Massardo, Isabella, Jaap van der Meer, Sharon O’Brien,

Fred Hollowood, Nora Aranberri, and Katrin Drescher (2016).

Machine Translation Post-editing Guidelines.

https://www.taus.net/insights/reports/taus-post-editing-guidelines

(consulted 13.06.2023).

- Mellinger, Christopher D. (2018). “Re-thinking

translation quality.” Target 30(2), 310-331.

- Moorkens, Joss (2017). “Under pressure:

translation in times of austerity.” Perspectives 25(3),

464-477.

- Moorkens, Joss (2018). “Eye tracking as a

measure of cognitive effort for post-editing of machine translation.”

Callum Walker and Federico M. Federici (eds) (2018). Eye Tracking

and Multidisciplinary Studies on Translation. Benjamins Translation

Library, 143, 55-69.

- Neubig, Graham, Makoto Morishita, and Satoshi

Nakamura (2015). “Neural Reranking Improves Subjective Quality

of Machine Translation: NAIST at WAT2015.” Proceedings of the 2nd

Workshop on Asian Translation (WAT2015), Kyoto, Japan. Workshop on

Asian Translation, 35–41.

- Nitzke, Jean and Anne-Kathrin Gros (2021). “Preferential changes in revision and post-editing.” Maarit Koponen, Brian Mossop, Isabelle S. Robert and Giovanna Scocchera (eds) (2021). Translation Revision and Post-editing. Industry Practices and Cognitive Processes. London: Routledge, 21-34.

- Nitzke, Jean, Silvia Hansen-Schirra, and Carmen Canfora (2019). “Risk management and post-editing competence.” The Journal of Specialised Translation 31, 239-259.

- Nitzke, Jean and Silvia Hansen-Schirra (2021).

A short guide to post-editing (Translation and Multilingual

Natural Language Processing 16). Berlin: Language Science

Press.

- Nunziatini, Mara and Lena Marg (2020). “Machine Translation Post-Editing Levels: Breaking Away from the Tradition and Delivering a Tailored Service.” Proceedings of EAMT 2020. https://aclanthology.org/2020.eamt-1.33.pdf (consulted 13.06.2023).

- O’Brien, Sharon (2011a). Introduction to

Post-Editing: Who, What, How and Where to Next?

https://aclanthology.org/2010.amta-tutorials.1.pdf (consulted

13.06.2023).

- O’Brien, Sharon (2011b). “Towards predicting post-editing productivity.” Machine Translation 25(3), 197-215.

- O’Brien, Sharon and Owen Conlan (2019).

“Personalising translation technology.” Helle Dam, Matilde

Brøgger, Karen Zethsen (eds) (2019). Moving Boundaries in

Translation Studies. London: Routledge, 81-97.

- O’Brien, Sharon, Johann Roturier, and Giselle de

Almeida (2009). “Post-Editing MT Output. Views for the

researcher, trainer, publisher and practitioner.” MT Summit 2009

tutorial. https://aclanthology.org/www.mt-archive.info/MTS-2009-OBrien-ppt.pdf

(consulted 13.06.2023).

- Plaza-Lara, Cristina (2020). “How does machine

translation and post-editing affect project management? An

interdisciplinary approach.” Hikma 19 (2), 163-182.

- Ragni, Valentina and Lucas Nunes Vieira (2022).

“What has changed with neural machine translation? A critical review of

human factors.” Perspectives 30(1), 137-158.

- Rico Pérez, Celia and Pedro L. Díez Orzas (2013). “EDI-TA: Post-editing methodology for Machine Translation.” Multilingualweb-LT Deliverable 4.1.4. Annex I, public report. https://www.w3.org/International/multilingualweb/lt/wiki/images/1/1f/D4.1.4.Annex_I_EDI-TA_Methology.pdf (consulted 13.06.2023).

- Rico Pérez, Celia and María del Mar Sánchez

Ramos (2023). “The Ethics of Machine Translation Post-editing

in the Translation Ecosystem.” Carla Parra and Helena Moritz (eds)

(2023), Towards Responsible Machine Translation. Ethical and Legal

Considerations in Machine Translation. Book series Machine

Translation: Technologies and Applications. Springer, 95-113.

- Risku, Hanna (2004). Translationsmanagement.

Interkulturelle Fachkommunikation im Kommunikationszeitalter.

Tübingen, Narr.

- Risku, Hanna (2010). “A Cognitive Scientific

View on Technical Communication and Translation. Do Embodiment and

Situatedness Really Make a Difference?” Target 22(1),

94–111.

- Rossi, Caroline and Jean-Pierre Chevrot (2019). “Uses and perceptions of machine translation at the European Commission.” The Journal of Specialised Translation 31, 177-200.

- Sakamoto, Akiko (2018). “Unintended Consequences

of Translation Technologies: from Project Managers’ Perspectives.”

Perspectives 27(1), 58-73.

- Sakamoto, Akiko (2019). “Why do many

translators resist post-editing? A sociological analysis using

Bourdieu’s concepts.” Journal of Specialised Translation

31, 201-216.

- Sakamoto, Akiko and Masaru Yamada (2020).

“Social groups in machine translation post-editing. A SCOT analysis.” Translation

Spaces 9(1), 78–97.

- Sánchez-Gijón, Pilar, Joss Moorkens and Andy Way

(2019). “Post-editing neural machine translation versus translation

memory segments.” Machine Translation 33(1-2), 31–59.

- Sarti, Gabriele, Arianna Bisazza, Ana Guerberof-Arenas, and Antonio Toral (2022). “DivEMT: Neural Machine Translation Post-Editing Effort Across Typologically Diverse Languages.” Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing. https://preview.aclanthology.org/emnlp-22-ingestion/2022.emnlp-main.532/ (consulted 13.06.2023).

- Silva, Roberto (2014). “Integrating Post-Editing

MT in a Professional Translation Workflow.” Sharon O'Brien, Laura

Winther Balling, Michael Carl, Michel Simard and Lucia Specia (eds)

(2014). Post-editing of Machine Translation: Processes and

Applications, Newcastle, Cambridge Scholars Publishing.

- Schnierer, Madeleine (2021). “Revision and

quality standards: do translation service providers follow

recommendations in practice?” Maarit Koponen, Brian Mossop, Isabelle S.

Robert and Giovanna Scocchera (eds). Translation Revision and

Post-editing. Industry Practices and Cognitive Processes. London:

Routledge, 109-130.

- TAUS (2010). Machine Translation

Post-editing Guidelines produced in partnership with CNGL (Centre

for Next Generation Localisation).

http://www.translationautomation.com/guidelines/ (consulted 13.06.2023).

- TAUS (2020). DQF

Business Intelligence Bulletin, September 2020.

https://info.taus.net/taus-business-intelligence-bulletin-september-2020

(consulted 13.06.2023).

- Teixeira, Carlos S. C. and Sharon O’Brien (2017). “Investigating the cognitive ergonomic aspects of translation tools in a workplace setting.” Translation Spaces 6(1), 79-103.

- Temizöz, Özlem (2016). “Postediting machine

translation output: Subject-matter experts versus professional

translators.” Perspectives, 24(4), 646–665.

- Toral, Antonio (2020). “Reassessing Claims of

Human Parity and Super-Human Performance in Machine Translation at WMT

2019.” Proceedings of EAMT 2020.

https://aclanthology.org/2020.eamt-1.20.pdf (consulted

13.06.2023).

- Vashee, Kirti (2021). “The Challenge of Defining

Translation Quality.” GALA Knowledge Center.

https://www.gala-global.org/knowledge-center/professional-development/articles/challenge-defining-translation-quality

(consulted 13.06.2023).

- Vieira, Lucas Nunes (2017). “Cognitive effort

and different task foci in post-editing effort and post-edited quality

of machine translation: a think-aloud protocol.” Across Languages

and Cultures 18(1), 79-105.

- Vieira, Lucas Nunes (2018). “Automation anxiety

and translators.” Translation Studies 13(1), 1-21.

- Vieira, Lucas Nunes (2019). “Post-editing of

Machine translation.” Minako O’Hagan (ed.) (2019) The Routledge

Handbook of Translation and Technology. London: Routledge,

319-336.

- Vieira, Lucas Nunes and Elisa Alonso (2019). “Post-editing in practice – Process, product and networks.” The Journal of Specialised Translation 31, 2-13. https://doi.org/10.26034/cm.jostrans.2019.173 (consulted 13.06.2023).

- Way, Andy (2018). “Quality expectations in

Machine Translation.” Joss Moorkens, Sheila Castilho, Federico Gaspari

and Stephen Doherty (eds) (2018). Translation Quality Assessment.

From Principles to Practice. Cham: Springer, 159-178.

- Wu, Yonghui, Mike Schuster, Zhifeng Chen, Quoc V. Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, Yuan Cao, Qin Gao, Klaus Macherey, Jeff Klingner, Apurva Shah, Melvin Johnson, Xiaobing Liu, Łukasz Kaiser, Stephan Gouws, Yoshikiyo Kato, Taku Kudo, Hideto Kazawa, Keith Stevens, George Kurian, Nishant Patil, Wei Wang, Cliff Young, Jason Smith, Jason Riesa, Alex Rudnick, Oriol Vinyals, Greg Corrado, Macduff Hughes, and Jeffrey Dean (2016). Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. https://arxiv.org/abs/1609.08144 (consulted 13.06.2023).

Biography

Celia Rico Pérez is currently a

Visiting Professor in Translation Technology at Universidad Complutense

de Madrid. Her research focuses on the use of machine translation and

other translation technologies, machine translation post-editing and

quality evaluation.

ORCID: 0000-0002-5056-8513

E-mail: celrico@ucm.es
