No audience left behind, one App fits all: an integrated approach to accessibility services

Estella Oncins and Pilar Orero, Universitat Autònoma de Barcelona

ABSTRACT

Most academic research on Audiovisual Translation and specifically on Media Accessibility has focused on content creation. If media content today can be compared to the visible tip of the iceberg, technology represents the unknown hidden part. Accessibility services are epigonic to media content, presenting an intimate relationship between technology for delivery and technology for consumption. Technology allows not only for the production of media content and access services, but also elicits new formats and interactions.

This paper focuses on accessibility services delivery. The first part provides a brief on state-of-the-art accessibility apps available in the market today, including emerging trends and market uptake. Section 2 presents audience needs away from the classical medical classification of assistive technologies and an analysis of existing accessibility apps. Section 3 presents a novel solution that is currently available in the market for transmedia storytelling. The new app has been designed to provide personalised access, away from one-size-fits-all services.

Keywords

Accessibility, distribution, personalisation, large audience, live events.

1. Introduction

Smartphones have become one of the most popular ways to consume media content (Casetti and Sampietro 2013, Weinel and Cunningham 2015), with particular focus on movies (Hessels 2017). The distribution of media content in a live condition, together with the associated accessibility files, presents an interesting challenge, with far too many variables for a single, comprehensive solution (Oncins et al. 2013). A live condition becomes even more complex when audience needs regarding interaction are taken into consideration (Linke-Ellis 2012). No single solution will work for all audiences. Nevertheless, a personalised modular system that is adaptable to user requirements will facilitate the comprehensive delivery of live accessibility services.

In the past 10 years many apps have been designed to cater for accessibility services and to overcome communication barriers for persons with disabilities (Oncins 2014). This follows EU legislation on accessibility, namely the updated Audiovisual Media Services Directive 2018/1808 and the European Accessibility Act, Directive (EU) 2019/882, and complies with the United Nations Convention on the Rights of Persons with Disabilities (UN CRPD 2008). However, accessibility in live events remains problematic.

The main issues are threefold: the cost of the event, the low return on investment and resilience. Live accessibility is also an issue from a technological standpoint in terms of service distribution and display, neither of which is usually available in open-air or closed conditions.

Nowadays, accessibility services at live events are provided in three ways:

- Venues: with in-house accessibility departments. They are usually limited to a single accessibility modality, namely subtitles or surtitles. These tend to be used in opera houses. These subtitles or surtitles are not specifically intended for audiences with hearing loss.

- Service providers: with mobile infrastructure. This is usually a small or medium-sized enterprise (SME) that goes to the venue, installs the equipment and provides the accessibility service.

- Freelancers: mainly hired by service providers to deliver accessibility services in a venue.

It should be highlighted that these three provisions often interact in order to provide an additional accessibility service. An example would be an opera house that regularly offers subtitling services but requires sign language interpretation or audio description for one specific performance. In this case a service provider company or freelance expert will be contracted. The delivery of a new ad hoc service for only one live performance may imply ephemeral and costly technological solutions.

2. Audience needs and expectations

According to the latest data provided by the European Health and Social Integration Survey (EHSIS 2012a), there are more than 70 million people with a disability in the European Union (EU), which means a disability rate of 17.6% in the total population of the EU27 aged 15 and over. In addition, the process of ageing is increasing the number of EU citizens deemed as having some type of impairment.

According to the data, there are an estimated 84 to 107 million people with a disability in the EU. The reason for this range can be attributed to prevailing differences in culture in the Member States along with differing definitions of disability, leading to inaccurate measurements.

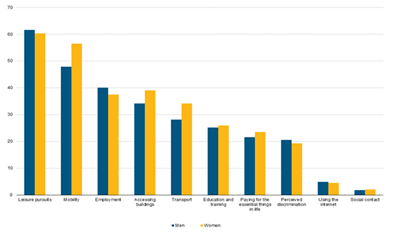

The European Health and Social Integration Survey (EHSIS 2012b) presents an overview of the special assistance needed by people with disabilities aged 15 and over in the EU in order to overcome limitations or barriers. The data show the areas in which these people face barriers regarding social participation. As shown in Figure 1, leisure pursuits is the area where the largest proportion of people with disabilities reported limitations.

Figure 1. Share of disabled persons aged 15 and over reporting a disability in the specified life areas, by sex, EU-27, 2012 (as a % of persons reporting a disability in at least one area)

The report includes a classification according to “a health component to identify long-standing health problems and activity difficulties” (EHSIS 2012b: 1). Based on a medical model, this audience classification is the standard approach in experimental Audiovisual Translation research (Agulló et al. 2018, Orero and Tor-Carroggio 2018, Tor-Carroggio and Orero 2019). Research in this field is performed in two independent silos dealing with two accessibility services: subtitling and audio description, for people with hearing loss and sight loss respectively (Agulló et al. 2018). In the former, research has started from a variable number of deaf and hard-of-hearing users. Studies in same-language subtitles started with Neves (2007) and Arnáiz-Uzquiza (2012), who defined the target audience as people with hearing loss. The same population is studied in experiments by Romero-Fresco (2009, 2010, 2013, 2015), Bartoll (2004, 2008, 2012), Bartoll and Martínez-Tejerina (2010), Perego et al. (2010), Pereira (2010), Szarkowska (2011), Szarkowska et al. (2011 and 2016), Miquel-Iriarte (2017) and Tsaousi (2017). All these studies have the same objective: understanding the processing of same-language subtitles created for people with hearing loss. The variables include the reproduction speed, the subtitle position and the format. None of the previous studies tested the participants’ reading ability, nor how the semantic value of words may determine comprehension.

The other popular media accessibility service is audio description. In this case the service targets persons with sight loss. Audio description (AD) deals with sound track perception and seeks to convey and complement the message of the audiovisual text. This complex audio narrative is at the centre of studies by Udo and Fels (2009), Szarkowska (2011), Chmiel and Mazur (2012a), Szarkowska and Jankowska (2012), Fryer and Freeman (2012, 2014), Romero-Fresco and Fryer (2013), Fresno et al. (2014), Walczak and Rubaj (2014), Szarkowska and Wasylczyk (2014), Fernández-Torné and Matamala (2015), Walczak and Fryer (2017, 2018), and three experimental PhD dissertations: Cabeza-Cáceres (2013), Fryer (2013), and Walczak (2017) (framed within the EU-funded project HBB4ALL). Other research results come from large projects such as DTV4ALL, ADLAB, the Pear Tree Project (Orero 2008, Chmiel and Mazur 2012b), AD-Verba (Chmiel and Mazur 2011) and OpenArt (Szarkowska et al. 2016). In all of these studies the objective is to understand the reception of audio description as different stimuli are presented. The variables include the narration speed, the intonation, the explicitation, the enjoyment and the use of synthetic voices, among others. None of the previous studies tested the participants’ hearing ability. The target audience is persons with sight loss; however, the final audio description service is an audio track, which relates more to hearing issues than sight issues.

There is no dispute that accessibility services are developed for people with disabilities. However, Audiovisual Translation studies on Media Accessibility still use medical conditions to profile their audience demographics: hearing loss or sight loss. Neither subtitling nor audio description will restore the hearing or sight of its audience. In the study of reading subtitles, the focus has been on reading skills. Profiling should be based on reading for legibility, readability and comprehension. To date, comprehension of reading subtitles has been related to the semantic complexity of words or concepts and is an interesting avenue for further research and development (Perego et al. 2010). Reading speed and live subtitling delay are measured according to the number of characters per second or words per minute. These measurements take syntax and the average length of words into consideration, so they are language dependent rather than dependent on the reception of the content. This was the case in a study by González-Iglesias and Martínez Pleguezuelos (2010) on gender violence in institutional TV adverts which provide accessibility services in Spain. Profiling people with hearing loss as the target group for gender violence and offering verbatim subtitles with the associated reading speed may lead to the belief that only people with hearing loss are in need of help and advice related to gender violence. Verbatim subtitles are an institutional requirement of hearing loss associations.
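The two reading-speed measurements mentioned above can be illustrated with a minimal sketch. The function name and the convention of counting spaces and punctuation as characters are assumptions made here for illustration; they are not taken from the cited studies, and counting conventions vary.

```python
def reading_speed(text: str, duration_s: float) -> tuple[float, float]:
    """Return (characters per second, words per minute) for one subtitle."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    chars = len(text)          # assumption: spaces and punctuation are counted
    words = len(text.split())  # assumption: words are whitespace-separated
    return chars / duration_s, words * 60 / duration_s


# Two four-word subtitles shown for the same two seconds.
short_words = reading_speed("No audience left behind.", 2.0)
long_words = reading_speed("Accessibility services integrate personalisation.", 2.0)
print(short_words)  # (12.0, 120.0)
print(long_words)
```

Both subtitles have the same words-per-minute value, yet the second has a much higher characters-per-second value because its words are longer. This is the sense in which such measurements depend on the language rather than on how the content is received.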

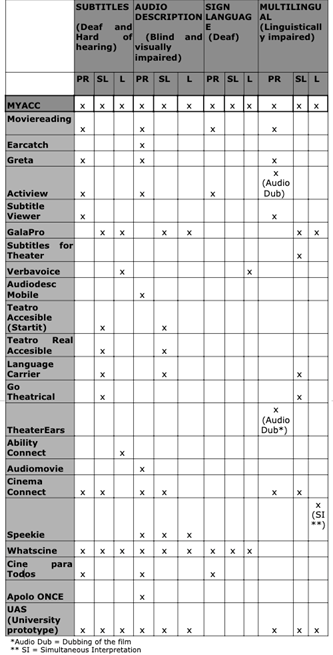

This traditional, medical audience classification has led to the design of solutions addressed to either one disabled group or the other (Sánchez Sierra and Selva Roca de Togores 2012). This can be seen in the table below (Table 1) where 23 apps have been organised according to the access service they provide and by service distribution: live, pre-recorded or recorded conditions.

Table 1. Existing media accessibility apps according to services

If we take a closer look at the apps described in the table that deliver pre-recorded audiovisual (AV) content, seven offer multilingual subtitles and eight offer subtitles for the deaf and hard of hearing (SDH). It should be highlighted that not all apps offer both types of subtitles. While the former usually provides subtitles in at least two different languages, the latter offers subtitles that include non-verbal information and the use of colours, which aid character identification. Regarding ADs of pre-recorded AV content, 11 apps cover this service, three apps provide sign language and one app offers dubbing. Out of all the apps, six include multilingual subtitles, SDH or AD whereas only Moviereading integrates all three services. This again shows audience segmentation through clinical requirements versus communicative requirements. Designed technology has focused on accessibility services that cater to the needs of users with disabilities, instead of approaching a wider notion of end-user capabilities (Agulló et al. 2018, Orero and Tor-Carroggio 2018).

Regarding semi-live events, nine apps offer SDH and six offer multilingual subtitles. Regarding ADs, 10 apps have it covered, eight of which integrate two main services: SDH and audio description. Only one app provides sign language. In terms of live events, we find a clear requirement for apps that will provide all three accessibility services. Only five apps integrate SDH and none provide live interlingual subtitles. In live events the most commonly used techniques are respeaking and typing (Oncins 2014). In the case of AD, the service is covered by four of the apps.

It is important to mention that three apps offer sign language, namely Moviereading, Actiview and Whatscine, of which only the last offers the service in semi-live and live conditions. This may be due to the fact that the streaming of videos is subject to latency problems, which continue to be a major challenge for the audiovisual industry. The introduction of 5G connections in the near future might be a major step towards problem mitigation if not a solution.

In terms of technologies used to synchronise and deliver accessibility services, there are two main trends. The first is the integration of artificial intelligence (AI) technology, which improves speech recognition; for example, Whatscine is used in semi-live events to automatically synchronise the AV content with the accessibility services. It is important to highlight that in semi-live events, accessibility contents are initially uploaded to the platform and then delivered manually. AI technology is employed to automate this delivery process. Still, the main challenges in terms of synchronisation and voice recognition arise when actors skip lines from the script, improvise, speak faster, alter the prosody, have strong accents or use slang, or when there is background noise.
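The automated delivery described above can be pictured as a script follower: each recognised utterance is compared with the next few lines of the pre-uploaded script, and the best match triggers the corresponding cue. The sketch below is an illustration only and does not reproduce the method of Whatscine or any other app. The class name, the look-ahead window and the similarity threshold are hypothetical, and fuzzy string matching from Python's standard `difflib` module stands in for a real speech recognition pipeline.

```python
from difflib import SequenceMatcher
from typing import Optional


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity between two strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


class ScriptFollower:
    """Track the position in a script from recognised speech (hypothetical)."""

    def __init__(self, script_lines, window: int = 3, threshold: float = 0.6):
        self.lines = list(script_lines)
        self.pos = 0                # index of the next expected line
        self.window = window        # how many upcoming lines to consider
        self.threshold = threshold  # minimum similarity to accept a match

    def hear(self, recognised: str) -> Optional[int]:
        """Return the index of the matched line, or None if nothing fits."""
        candidates = range(self.pos, min(self.pos + self.window, len(self.lines)))
        best = max(candidates,
                   key=lambda i: similarity(recognised, self.lines[i]),
                   default=None)
        if best is None or similarity(recognised, self.lines[best]) < self.threshold:
            return None             # improvisation or noise: keep waiting
        self.pos = best + 1         # any skipped lines are left behind
        return best


script = [
    "To be, or not to be, that is the question.",
    "Whether 'tis nobler in the mind to suffer",
    "The slings and arrows of outrageous fortune,",
    "Or to take arms against a sea of troubles",
]
follower = ScriptFollower(script)
print(follower.hear("to be or not to be that is the question"))      # 0
print(follower.hear("the slings and arrows of outrageous fortune"))  # 2
print(follower.hear("Good evening all!"))                            # None
```

In the second call the actor has skipped a line, and the look-ahead window lets the follower catch up. In the third, an improvised remark matches nothing closely enough, so no cue is triggered. Accents, slang and background noise would lower the similarity scores in the same way, which is why a threshold that is too strict misses cues and one that is too lax fires the wrong ones.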

The second notable trend is an increase in the use of the audio channel for services other than AD, e.g. dubbing or voice-over, offered by GalaPro for live and semi-live events, and TheaterEars for pre-recorded events. Speekie and GalaPro offer live AD services for conferences and other types of events for which there is no script available. Speekie also allows live commentary and real-time translation at conferences and speeches.

Interestingly, some of the apps that include multi-language subtitles offer services beyond the scope of assistive technologies, such as alternative content related to the AV content, in order to appeal to a broad target audience. However, apps covering SDH and ADs are limited to content which exclusively relates to the available performances that provide accessibility services.

3. From Assistive Technologies to Design for All

As observed in the previous section, to date, most apps intended for the use of accessibility services following the medical model of disability are categorised as assistive technologies (Orero and Tor-Carroggio 2018). The UN World Health Organisation published the International Classification of Functioning, Disability and Health (ICF) in 2001. The ICF was intended to complement its sister classification system, the International Classification of Diseases (ICD) (Peterson and Elliott 2008). The ICF Model sees disability as the complex context of an individual, taking institutional and societal factors into account (Dubois and Trani 2009). It is operationalised through the World Health Organization Disability Assessment Schedule II (WHODAS II) and offers a taxonomy of disabilities that serves as little more than a classification (Dubois and Trani 2009). Ellis (2016) pointed out the underlying difference between disability and impairment, and the need for an understanding of end user capabilities, beyond disability. Confronting the limitations of existing approaches leads to the mainstreaming of accessibility and the inclusion of user profiles beyond persons with disabilities. As pointed out by Agulló et al., “The lack of capabilities linked to disability may have less impact than the lack of capabilities linked to other aspects such as technology” (2018: 198). This concept has also been suggested as the way forward by the UN agency International Telecommunication Union (ITU):

Besides the more commonly used “medical model of disability”, which considers disability “a physical, mental, or psychological condition that limits a person’s activities”, there is a more recent “social model of disability”, which has emerged and is considered a more effective or empowering conceptual framework for promoting the full inclusion of persons with disabilities in society. Within the social model, a disability results when a person who (a) has difficulties reading and writing; (b) attempts to communicate, yet does not understand or speak the national or local language, and (c) has never before operated a phone or computer and attempts to use one – without success. In all cases, disability has occurred, because the person was not able to interact with his or her environment. (2017:2)

The ITU recommends leaving behind the clinical model of disability and embracing a social model. Some research results in Audiovisual Translation have already indicated the need for change in audience classification. Romero-Fresco’s (2015) findings on reading subtitles linked readability to viewers’ educational background rather than to their hearing impairment. Miquel-Iriarte (2017), Neves (2018) and Agulló et al. (2018) also raised the issue, beyond the audience hearing needs, moving “from accessibility to usability for diverse audiences” (Agulló et al. 2018: 198). Along the same lines, AD can be beneficial for a much broader audience than the visually impaired. It has proven to be effective for language learning, writing skills development and improvement of learning outcomes (Jankowska 2019, Walczak 2016).

The need for a comprehensive tool, designed for accessing cultural events in live conditions from a capability approach within Design for All principles, was the context for MyAcc, the new inclusive multiservice app.

4. MyAcc

The departure point for the development of MyAcc was the shortcomings of previous solutions for the live delivery of accessibility services, mainly relating to cost. A precursor for MyAcc was developed at Universitat Autònoma de Barcelona (UAB) in an attempt to deliver accessibility during live lectures, institutional academic acts, theatre performances and movies screened at the cinema. According to the UAB figures from 2019, the university has an average of 30,000 students per year including undergraduate, master and lifelong learning students. UAB also has an average of 4,000 foreign students. Teaching and institutional acts can be delivered in any of the three official UAB languages: Catalan, English and Spanish. This situation creates real demand for language accessibility, where, in terms of numbers, profiling shifts from people with sensory disabilities to people with language disabilities. UAB started an active accessibility campaign, organising accessible activities and purchasing state-of-the-art equipment for the production, distribution and delivery of said activities (Oncins et al. 2013). This accessibility campaign led to the opening of a campus Accessibility Service that is also responsible for the production of accessibility service content. The price of the production of content in three languages posed concerns about its financial viability, leading to alternative production avenues, such as hiring external providers. As soon as one space was made accessible, other spaces requested accessibility, which created additional funding issues and the need for a solution for all on-campus UAB spaces. This led to further scrutiny of the accessibility delivery system, with a view to making it independent of the venue equipment. Taking this into account, requirements were defined using a new, communicative capabilities classification of user profile, departing from the medical model. The new app was designed to fulfil four main principles:

- Inclusive. The Digital Revolution changed the way people interact with audiovisual content. According to the European Commission (2018), the media landscape has shifted dramatically in less than a decade. Millions of Europeans, especially young people, watch content online, on demand and on a range of mobile devices. In fact, the EU’s updated Audiovisual Media Services Directive 2018/1808, in line with the international standard on subtitling, ISO/IEC DIS 20071-23 (International Organization for Standardization, 2018), provides a much broader identification of subtitle users, including D/deaf and hard-of-hearing persons, persons with learning difficulties or cognitive disabilities, non-native speakers, people who cannot hear the audio content due to environmental conditions, or circumstances where the sound is not accessible (e.g. noisy surroundings), the sound is not available (e.g. muted, speakers are not working), or the sound is not appropriate (e.g. a quiet library) (Romero-Fresco 2018: 188). As stated before, this opens a niche market for accessibility services, away from disability and focusing on communication, with sound-sensitive environments or languages at its core. It is also important to acknowledge that for real-time events, MyAcc can be used in an inclusive way by allowing content creators to upload real-time transmedia content onto the platform, and deliver it to the audience as a second screen in order to enhance audience engagement and produce an optimal user experience. In a nutshell, MyAcc aims to offer a service for all communication needs and contexts.

- Non-Invasive. MyAcc does not need the installation of any additional, dedicated hardware (magnetic loops, additional screens for dubbing or sign language, etc.) in order to enable accessibility services at an event. MyAcc is based on: 1) a cloud service for the data delivery (operational with any Internet connection such as WiFi, 3G/4G or soon to arrive 5G), 2) a computer for the content generation and management and 3) end-users’ smartphones for reception.

- Integrated. The MyAcc value proposition is based on combining a real-time transmedia application for real-time content delivery with a solution dedicated to accessibility services for broadcasters. It opens up the opportunity to integrate all media content delivery into a single tool/platform for accessibility, from real-time to on-demand services. Media content should only be produced once (subtitles, audio description, sign language and even real-time translation) and should be resilient.

- Cost-effective. As MyAcc is an inclusive service, it significantly increases the number of potential clients that will consume the service, thus reducing the cost per potential user. Since it is a non-invasive service, it requires no investment in installation or hardware. It is based on a Software as a Service (SaaS) model, adapted to client requirements with different licences. Given that no dedicated hardware is required, there are no associated depreciation or maintenance costs. Finally, as it is an integrated service, it reduces the cost of generating different accessibility content for each specific distribution channel.
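The three components named under the non-invasive principle (a cloud service, an operator's computer and the audience's smartphones) can be pictured as a simple publish/subscribe arrangement. The sketch below is a hypothetical, in-memory illustration of that idea, not MyAcc's implementation: the class names, channel keys and messages are all assumptions, and a real deployment would run over a network.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Phone:
    """An audience member's smartphone (the reception end)."""
    owner: str
    inbox: list = field(default_factory=list)

    def receive(self, payload: str) -> None:
        self.inbox.append(payload)


class CloudRelay:
    """In-memory stand-in for the cloud delivery service (hypothetical)."""

    def __init__(self) -> None:
        self._channels = defaultdict(list)  # (service, language) -> phones

    def subscribe(self, phone: Phone, service: str, language: str) -> None:
        self._channels[(service, language)].append(phone)

    def publish(self, service: str, language: str, payload: str) -> int:
        """Deliver payload to every subscribed phone; return how many got it."""
        phones = self._channels.get((service, language), [])
        for phone in phones:
            phone.receive(payload)
        return len(phones)


relay = CloudRelay()
anna, ben = Phone("Anna"), Phone("Ben")
relay.subscribe(anna, "subtitles", "en")
relay.subscribe(ben, "subtitles", "es")

# The operator's computer publishes each item once per channel.
relay.publish("subtitles", "en", "Welcome.")
relay.publish("subtitles", "es", "Bienvenidos.")
print(anna.inbox, ben.inbox)  # ['Welcome.'] ['Bienvenidos.']
```

Because the only equipment on the audience side is the phone itself, adding a venue or a user means adding subscriptions rather than hardware, which is the point made by the non-invasive and cost-effective principles.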

Within this context, the app was designed to integrate all accessibility services in a single web platform with a vision beyond disability. Two conditions required by the app are defined as follows: the environment where the media content is consumed and the alternative communication of the visual, audio, and linguistic components of the media content. The integration of technologies such as Text-to-Speech, Speech-to-Text and Machine Translation has been considered part of the alternative communication, catering to the needs of an increasingly diverse audience. Finally, the full accessibility chain has been taken into account according to the Design for All principles.

4.1. All services on one platform

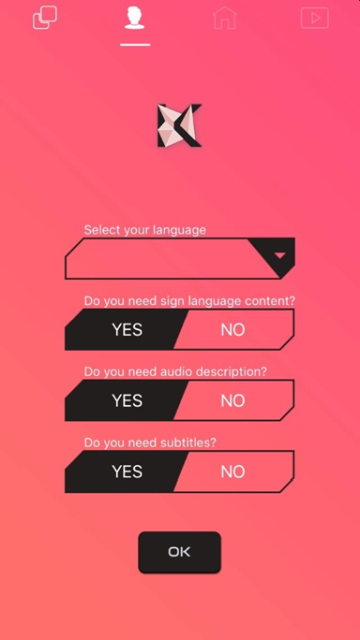

MyAcc is a digital solution for transmedia storytelling and personalised communication. It works for one person as well as for very large audiences. MyAcc can deliver audio, video, text and images in real time, whether the content is live or pre-recorded. Content delivery is personalised according to user profiling and communicative needs (see Figure 2).

Figure 2. Available services included in the app

The use of the personal smartphone and its capabilities implies no hardware dependency. The use of the personal smartphone removes the need for the end user to learn any functionality. User interaction is intuitive through an accessible menu whereby the services are chosen. The following accessibility services are included:

- Multi Language

- Subtitles

- Audio description and audio introduction

- Audio subtitles

- Easy-to-read (subtitles)

- Sign language

- Audio description with audio subtitles

More than one service can be selected from the menu without interfering with the needs of the user. In the same auditorium, different users have different needs and/or more than one need. For instance, if a user selects English language, subtitles and audio description, the delivered services will be audio description and audio subtitles in English. A second user can select Spanish language and subtitles, and in doing so the delivered service will be subtitles in Spanish. The app thus covers a wide notion of accessibility services:

- Subtitles and Sign Language Interpretation: to duplicate the dialogue or sound communication channels. The subtitles have been adapted to both the context in which the media is consumed (public spaces or noisy environments) and the audience: D/deaf and hard-of-hearing persons, newcomers, the elderly, people with learning disabilities, and people who may find reading a challenge for any reason.

- Audio description, audio introduction and audio subtitles: to duplicate the visual communication channels. The services are delivered through clients’ headphones. Regarding the environment, these services offer alternative spoken communication for the visual channels, taking into account the subtitles or any written content. The app allows for both audio description and audio subtitles to play simultaneously.

- Easy-to-read subtitles and audio descriptions: accessibility services where fast and efficient communication is required. These are suitable for environments where media content is expected to be consumed at a rapid pace, or for audiences who require simplified communication. Recent reception studies in the field of easy-to-read subtitles (Oncins et al. forthcoming) report the benefits of easy-to-read subtitles for the aged with and without hearing loss.
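The menu example given before this list (English with subtitles and audio description, Spanish with subtitles only) can be expressed as a small resolution step. The sketch below is an assumption-laden illustration: the function name and the single rule it applies are inferred from that one example, not from the app's actual logic.

```python
def resolve_services(language: str, selected: set[str]) -> dict:
    """Map a user's menu selection to the services actually delivered."""
    delivered = set(selected)
    # Assumed rule, inferred from the example in the text: a user who selects
    # audio description together with subtitles receives the subtitles as
    # audio subtitles rather than as on-screen text.
    if {"subtitles", "audio description"} <= delivered:
        delivered.discard("subtitles")
        delivered.add("audio subtitles")
    return {"language": language, "services": sorted(delivered)}


first_user = resolve_services("English", {"subtitles", "audio description"})
second_user = resolve_services("Spanish", {"subtitles"})
print(first_user)   # {'language': 'English', 'services': ['audio description', 'audio subtitles']}
print(second_user)  # {'language': 'Spanish', 'services': ['subtitles']}
```

Each user's selection is resolved independently, so two people sitting in the same auditorium can receive different combinations of services from the same source content.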

The new heterogeneity of the audience profile and audience attitudes demands new approaches in Media Accessibility. In addition, new consumer attitudes have to be taken into account. Data show that 80% of people that use subtitles are not deaf and use “common accessibility tools” to overcome problems relating to their environments. Furthermore, voice recognition services are expanding to new horizons, primarily aimed at the blind and people with sight-loss. It could be stated that voice and voice-enabled devices are increasing at a fast rate and voice-activated speakers have become part of people’s routines (Kleinberg 2018). The use of these new technologies is expected to increase in the coming years and the human-computer interaction (HCI) through voice recognition will become normalised. It is therefore expected from an end-user point of view that speaking and receiving audio content from an app will be accepted. This further extends the accessibility to a broader audience.

5. Conclusions and further research

Attending a cultural event starts before the audience is seated. Buying the ticket and finding available accessible transport to and from the venue are both steps in the process towards a fully accessible experience. How to get to the assigned seat may also be a challenge. According to Universal Design or Design for All principles, the accessibility chain starts when people make the decision to attend a venue, followed by how to get to the venue, the experience itself, a safe return home and subsequent feedback to allow end-users to provide their insights into the experience. If any element in the chain is broken then accessibility cannot be considered as granted (Orero 2017).

MyAcc has been conceived to create new communication channels for the audience and to enable interaction. It allows interaction based on real-time multimedia content messaging. It also allows the content creator to send and personalise multimedia messages — audio, video, image, text — to any device connected to the Internet via the mobile app. This allows venues to engage with audiences and get instant feedback from both inside and outside the venue, filtered by the preferences or needs of each end-user in the audience, creating a unique experience.

MyAcc has the potential to go beyond offering accessibility services and can be developed further to obtain data from end-users sitting in the auditorium. Gathering relevant feedback from end-users and adapting the services to target other audience needs in an inclusive approach may pave the way for the creation of new services.

MyAcc has been conceived under the principles of Design for All, looking at accessibility as alternative communication in a given environment. MyAcc aims at rethinking the accessibility chain and integrating all accessibility services in a single platform. It will offer the venues a new channel, allowing better communication and a better understanding of the diverse needs of their audiences, in a more inclusive and engaging way. It will provide the users with a new tool with which to enjoy cultural media content.

Acknowledgements

This work has been partially funded by the H2020 EasyTV H2020-ICT-2016-2 761999, TRACTION H2020-SC6-TRANSFORMATIONS-870610, ERASMUS+ LTA 2018-1-DE01-KA203-004218, ERASMUS+ EASIT 2018-1-ES01-KA203-05275, RAD PGC2018-096566-B-I00, IMPACT 2019-1-FR01-KA204-062381 and 2019SGR113.

References

- Agulló, Belén, Anna Matamala and Pilar Orero (2018). “From Disabilities to Capabilities: testing subtitles in immersive environments with end users.” HIKMA 17, 195-220.

- Arnáiz-Uzquiza, Verónica (2012). “Los parámetros que identifican el Subtitulado para Sordos. Análisis y clasificación.” Rosa Agost, Pilar Orero and Elena Di Giovanni (eds) (2012). Multidisciplinarity in Audiovisual Translation/Multidisciplinarietat en traducció audiovisual. MonTI 4, 103-132.

- Bartoll, Eduard (2004). “Parameters for the classification of subtitles.” Pilar Orero (ed.) (2004). Topics in Audiovisual Translation. Amsterdam/Philadelphia: John Benjamins, 53-60.

- Bartoll, Eduard (2008). Paràmetres per a una taxonomia de la subtitulació. PhD thesis. Universitat Pompeu Fabra.

- Bartoll, Eduard (2012). La subtitulació: Aspectes teòrics i pràctics. Barcelona: Eumo.

- Bartoll, Eduard and Anjana Martínez-Tejerina (2010). “The positioning of subtitles for the deaf and hard of hearing.” Anna Matamala and Pilar Orero (eds) (2010). Listening to Subtitles: Subtitles for the Deaf and Hard of Hearing. Bern: Peter Lang, 69-86.

- Cabeza-Cáceres, Cristóbal (2013). Audiodescripció i recepció. Efecte de la velocitat de narració, l’entonació i l’explicitació en la comprensió fílmica. PhD thesis. Universitat Autònoma de Barcelona.

- Chmiel, Agnieszka and Iwona Mazur (2011). “Overcoming barriers: The pioneering years of audio description in Poland.” Adriana Serban, Anna Matamala and Jean-Marc Lavaur (eds) (2011). Audiovisual Translation in Close-Up: Practical and Theoretical Approaches. Bern: Peter Lang, 279–296.

- Chmiel, Agnieszka and Iwona Mazur (2012a). “Audio description research: some methodological considerations.” Elisa Perego (ed.) (2012). Emerging topics in translation: Audio description. Trieste: EUT, 57-80.

- Chmiel, Agnieszka and Iwona Mazur (2012b). “Audio Description Made to Measure: Reflections on Interpretation in AD based on the Pear Tree Project Data.” Mary Carroll, Aline Remael and Pilar Orero (eds) (2012). Audiovisual Translation and Media Accessibility at the Crossroads. Media for All 3. Amsterdam/New York: Rodopi, 173-188.

- Casetti, Francesco and Sara Sampietro (2013). “With Eyes, with Hands: The Relocation of Cinema into the iPhone.” Pelle Snickars and Patrick Vonderau (eds) (2013). Moving Data: The iPhone and the Future of Media. New York: Columbia University Press, 19-30.

- Dubois, Jean-Luc and Jean-François Trani (2009). “Extending the capability paradigm to address the complexity of disability.” ALTER, European Journal of Disability Research 3, 192–218.

- European Commission (EC) (2018). Audiovisual Media Services Directive (AVMSD). https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=LEGISSUM%3Aam0005 (consulted 19.04.2020).

- European Commission (EC) (2019). European Accessibility Act. https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32019L0882&from=ES (consulted 19.04.2020).

- European Commission (EC) (2018). Digital Single Market: updated audiovisual rules. http://europa.eu/rapid/press-release_MEMO-18-4093_en.htm (consulted 19.04.2020).

- European Health and Social Integration Survey (EHSIS) (2012a). Disability statistics - need for assistance. https://ec.europa.eu/eurostat/statistics-explained/pdfscache/34419.pdf (consulted 19.04.2020).

- European Health and Social Integration Survey (EHSIS) (2012b). Disability statistics - barriers to social integration. https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Archive:Disability_statistics_-_barriers_to_social_integration (consulted 19.04.2020).

- Ellis, Gerry (2016). “Impairment and Disability: Challenging Concepts of Normality.” Anna Matamala and Pilar Orero (eds) (2016). Researching Audio Description. London: Palgrave Macmillan, 35-45.

- Fernández-Torné, Anna and Anna Matamala (2015). “Text-to-speech versus human voiced audio description: a reception study in films dubbed into Catalan.” The Journal of Specialised Translation 24, 61-88.

- Fresno, Nazaret, Judit Castellà and Olga Soler-Vilageliu (2014). “Less is more. Effects of the amount of information and its presentation in the recall and reception of audio described characters.” International Journal of Sciences: Basic and Applied Research 14(2), 169-196.

- Fryer, Louise and Jonathan Freeman (2012). “Cinematic language and the description of film: keeping AD users in the frame.” Perspectives 21(3), 412-426.

- Fryer, Louise and Jonathan Freeman (2014). “Can you feel what I’m saying? The impact of verbal information on emotion elicitation and presence in people with a visual impairment.” Anna Felnhofer and Oswald D. Kothgassner (eds) (2014). Challenging Presence: Proceedings of the 15th international conference on presence. Vienna: Facultas.wuv, 99-107.

- Fryer, Louise (2013). Putting it into words: the impact of visual impairment on perception, experience and presence. PhD thesis. London: University of London.

- González-Iglesias González, David and José A. Martínez Pleguezuelos (2010). “Gender Violence Campaigns and Accessibility to Information in the Media.” Martin Kažimír (ed.) (2010). Kontexty. Interdisciplinárny zborník. Gorlice: Elpis, 237-249.

- Hessels, Scott (2017). “Planted Movies: Site-Embedded Film Distribution.” Paper presented at Circuits of Cinema (Ryerson University, 21-24 June 2017).

- International Organization for Standardization (2018). Information technology – User interface component accessibility – Part 23: Guidance on the visual presentation of audio information (including captions and subtitles) (ISO/IEC DIS 20071-23:2018). https://www.iso.org/standard/70722.html (consulted 17.04.2020).

- International Telecommunication Union (ITU) (2017). Question 7/1: Access to telecommunication/ICT services by persons with disabilities and with specific needs. https://www.itu.int/dms_pub/itu-d/opb/stg/D-STG-SG01.07.4-2017-PDF-E.pdf (consulted 17.04.2020).

- Jankowska, Anna (2019). “Accessibility Mainstreaming and Beyond – Senior Citizens as Secondary Users of Audio Subtitles in Cinemas.” International Journal of Language, Translation and Intercultural Communication 8, 28-47.

- Kleinberg, Sara (2018). “5 ways voice assistance is shaping consumer behaviour.” Think with Google, March. https://www.thinkwithgoogle.com/intl/en-apac/trends-and-insights/voice-assistance-consumer-experience/ (consulted 19.04.2020).

- Linke-Ellis, Nancy (2012). “Accessibility for Digital Cinema.” SMPTE Motion Imaging Journal 121(6), 90-94.

- Neves, Josélia (2007). “There is Research and Research: Subtitling for the Deaf and Hard of Hearing (SDH).” Catalina Jiménez Hurtado (ed.) (2007). Traducción y accesibilidad. Subtitulación para sordos y audiodescripción para ciegos: nuevas modalidades de Traducción Audiovisual. Frankfurt: Peter Lang, 27-40.

- Neves, Josélia (2018). “Subtitling for Deaf and Hard of Hearing Audiences. Moving forward.” Luís Perez-González (ed.) (2018). The Routledge Handbook of Audiovisual Translation. London and New York: Routledge, 82-95.

- Miquel-Iriarte, Marta (2017). The reception of subtitling for the deaf and hard of hearing: viewers’ hearing and communication profile & Subtitling speed of exposure. PhD thesis. Universitat Autònoma de Barcelona.

- Oncins, Estella (2014). Accessibility for the scenic arts. PhD thesis. Universitat Autònoma de Barcelona.

- Oncins, Estella et al. (2013). “All Together Now: A multi-language and multi-system mobile application to make live performing arts accessible.” The Journal of Specialised Translation 20, 147-164.

- Oncins, Estella et al. (forthcoming). “Accessible scenic arts and Virtual Reality: A pilot study in user preferences when reading subtitles in immersive environments.” Mabel Richart-Marset and Francesca Calamita (eds) (provisional title). Traducción y Accesibilidad en los medios de comunicación: de la teoría a la práctica. MonTI 12.

- Orero, Pilar (2017). “The professional profile of the expert in media accessibility for the scenic arts.” Rivista internazionale di Tecnica della Traduzione RIIT 19, 143-161.

- Orero, Pilar (2008). “Three different receptions of the same film. The Pear Stories applied to Audio Description.” European Journal of English Studies 12(2), 179–193.

- Orero, Pilar and Irene Tor-Carroggio (2018). “User requirements when designing learning e-content: interaction for all.” Evangelos Kapros and Maria Koutsombogera (eds) (2018). Designing for the User Experience in Learning Systems. Berlin: Springer, 105-122.

- Perego, Elisa et al. (2010). “The Cognitive Effectiveness of Subtitle Processing.” Media Psychology 13(3), 243–272.

- Pereira, Ana (2010). “Criteria for elaborating subtitles for the deaf and hard of hearing adults in Spain: Description of a case study.” Anna Matamala and Pilar Orero (eds) (2010). Listening to Subtitles. Subtitles for the Deaf and Hard of Hearing. Vienna: Peter Lang, 87-102.

- Peterson, David B. and Timothy R. Elliott (2008). “Advances in conceptualizing and studying disability.” Steven Brown and Robert Lent (eds) (2008). Handbook of Counseling Psychology. New Jersey: Wiley, 212-230.

- Romero-Fresco, Pablo (2009). “More haste less speed: edited versus verbatim respoken respeaking.” Vigo International Journal of Applied Linguistics 6, 109–133.

- Romero-Fresco, Pablo (2010). “D’Artagnan and the Seven Musketeers: SUBSORDIG travels to Europe.” Anna Matamala and Pilar Orero (eds) (2010). Listening to Subtitles. Subtitles for the Deaf and Hard of Hearing. Vienna: Peter Lang, 175-189.

- Romero-Fresco, Pablo (2013). “Accessible filmmaking: Joining the dots between audiovisual translation, accessibility and filmmaking.” The Journal of Specialised Translation 20, 201-223.

- Romero-Fresco, Pablo (2015). “The Reception of Subtitles for the Deaf and Hard of Hearing in Europe.” Pablo Romero-Fresco (ed.) (2015). The Reception of Subtitles for the Deaf and Hard of Hearing in Europe. Bern: Peter Lang, 350-353.

- Romero-Fresco, Pablo (2018). “In support of a wide notion of media accessibility: Access to content and access to creation.” Journal of Audiovisual Translation 1(1), 187-204.

- Romero-Fresco, Pablo and Louise Fryer (2013). “Could audio described films benefit from audio introductions? A reception study with audio description users.” Journal of Visual Impairment and Blindness 107(4), 287-295.

- Sánchez Sierra, Javier and Joaquín Selva Roca de Togores (2012). “Designing Mobile Apps for Visually Impaired and Blind Users.” Leslie Miller and Silvana Roncaglio (eds) (2012). Proceedings of Fifth International Conference on Advances in Computer-Human Interactions ACHI 2012. Valencia: IARIA, 47-52.

- Szarkowska, Agnieszka (2011). “Text-to-speech audio description: towards wider availability of audio description.” The Journal of Specialised Translation 15, 142-163.

- Szarkowska, Agnieszka et al. (2011). “Verbatim, standard, or edited? Reading patterns of different captioning styles among deaf, hard of hearing, and hearing viewers.” American Annals of the Deaf 156(4), 363-378.

- Szarkowska, Agnieszka and Anna Jankowska (2012). “Text-to-speech audio description of voice-over films. A case study of audio described Volver in Polish.” Elisa Perego (ed.) (2012). Emerging topics in translation: Audio description. Trieste: EUT, 81-98.

- Szarkowska, Agnieszka and Piotr Wasylczyk (2014). “Audiodeskrypcja autorska.” Przekładaniec 28, 48-62.

- Szarkowska, Agnieszka et al. (2016). “Open Art: Designing Accessible Content in a Multimedia Guide App for Visitors with and without Sensory Impairments.” Anna Matamala and Pilar Orero (eds) (2016). Researching Audio Description. London: Palgrave Macmillan, 301-320.

- Tor-Carroggio, Irene and Pilar Orero (2019). “User profiling in audio description reception studies: questionnaires for all.” IntraLinea 21.

- Tsaousi, Aikaterini (2017). Sound effect labelling in subtitling for the Deaf and Hard-of-Hearing: An experimental study on audience reception. PhD Thesis. Universitat Autònoma de Barcelona.

- Udo, John-Patrick and Deborah Fels (2009). “Suit the action to the word, the word to the action. An unconventional approach to describing Shakespeare’s Hamlet.” Journal of Visual Impairment and Blindness 103(3), 178-183.

- United Nations (2006). The Convention on the Rights of Persons with Disabilities and its Optional Protocol (A/RES/61/106). https://www.un.org/development/desa/disabilities/convention-on-the-rights-of-persons-with-disabilities.html (consulted 24.04.2020).

- Walczak, Agnieszka (2016). “Foreign Language Class with Audio Description: A Case Study.” Anna Matamala and Pilar Orero (eds) (2016). Researching Audio Description. London: Palgrave Macmillan, 187–204.

- Walczak, Agnieszka (2017). Immersion in audio description. The impact of style and vocal delivery on users’ experience. PhD thesis. Universitat Autònoma de Barcelona.

- Walczak, Agnieszka and Louise Fryer (2017). “Creative description. The impact of audio description style on presence in visually impaired audiences.” British Journal of Visual Impairment 35(1), 6-17.

- Walczak, Agnieszka and Louise Fryer (2018). “Vocal delivery of audio description by genre.” Perspectives 26(1), 69-83.

- Walczak, Agnieszka and Maria Rubaj (2014). “Audiodeskrypcja na lekcji historii, biologii i fizyki w klasie uczniów z dysfunkcja wzroku.” Przekładaniec 28, 63-79.

- Weinel, Jonathan and Stuart Cunningham (2015). “Second Screen Comes to the Silver Screen: A Consumer Study Regarding Mobile Technologies in the Cinema.” Paper presented at 6th International Conference Internet Technologies and Applications (ITA) (Glyndwr University, 8-10 September 2015).

Websites

- Ability Connect https://abilityconnect.ua.es (consulted 17.04.2020)

- Actiview https://actiview.co/en/app (consulted 17.04.2020)

- Apolo ONCE https://apps.apple.com/es/app/apolo-once/id1282988384 (consulted 17.04.2020)

- AudiodescMobile https://apps.apple.com/es/app/audescmobile/id705749595 (consulted 17.04.2020)

- Audiomovie https://apps.apple.com/us/app/audiomovie/id1195796936 (consulted 17.04.2020)

- CinemaConnect https://assets.sennheiser.com/global-downloads/file/6811/CinemaConnect_Broschuere-EN_Sennheiser.pdf (consulted 17.04.2020)

- Cine para Todos https://cineparatodos.gov.co/671/w3-propertyvalue-34249.html (consulted 17.04.2020)

- Earcatch https://earcatch.co.uk (consulted 17.04.2020)

- Go Theatrical https://theatrecaptioning.com.au/download-gotheatrical-app (consulted 17.04.2020)

- GalaPro https://www.galapro.com/#galapro-app (consulted 17.04.2020)

- Greta http://www.gretaundstarks.de/greta (consulted 17.04.2020)

- Language Carrier http://www.languagecarrier.es/download-app.html (consulted 17.04.2020)

- Moviereading https://www.moviereading.com/#home (consulted 17.04.2020)

- Speekie http://www.accessibility-service.info/speekie (consulted 17.04.2020)

- Teatro Accesible (Startit) https://aptent.es/startit (consulted 17.04.2020)

- Teatro Real Accesible https://play.google.com/store/apps/details?id=es.sdos.teatroreal&hl=en (consulted 17.04.2020)

- TheaterEars https://www.theaterears.com/english (consulted 17.04.2020)

- Subtitle Viewer http://www.subtitleviewer.com (consulted 17.04.2020)

- Subtitles for Theater https://www.subtitlesfortheatre.com (consulted 17.04.2020)

- Verbavoice https://www.verbavoice.de (consulted 17.04.2020)

- Whatscine http://www.whatscine.es (consulted 17.04.2020)

Biographies

Estella Oncins holds a PhD in Accessibility and Ambient Intelligence from Universitat Autònoma de Barcelona, Spain. She has extensive experience in providing accessibility for live events as a freelance translator, subtitler, surtitler and respeaker for various Spanish television channels and conferences, and as an audio describer for the Liceu Opera House. Her research areas are audiovisual translation, media accessibility and creative industry. She is currently involved in the W3C Education and Outreach Working Group (EOWG). She is a partner in KA2 LTA and IMPACT. She is also a partner in H2020: HELIOS and TRACTION.

Email: estella.oncins@uab.cat

Pilar Orero, PhD (UMIST), teaches in the Faculty of Political Science at Universitat Autònoma de Barcelona, Spain. She is a member of the research group TransMedia Catalonia. She leads numerous research projects on media accessibility funded by the Spanish and Catalan Governments. She participates in the UN agency ITU Intersector Rapporteur Group Audiovisual Media Accessibility (IRG AVA) and in ITU-D, creating an online course on Media Accessibility. She participates in ISO on standards related to media accessibility. She has held the INDRA Accessible Technologies Chair since 2012, led the EU projects HBB4ALL (2013-2016) and KA2 ACT (2015-2018), and is a partner in KA2 ADLAB PRO, EASIT, LTA and IMPACT. She is also a partner in H2020: Easy TV, ImAc, HELIOS, REBUILD, TRACTION and SOCLOSE. Website: http://gent.uab.cat/pilarorero/

Email: pilar.orero@uab.cat

Notes

Note 1:

HBB4ALL, Hybrid Broadcast Broadband for All, was an EU-funded research project (2013-2016). The project aimed at making cross-platform production and distribution of accessibility features more cost-efficient, more flexible and easier to use.

Note 2:

DTV4ALL, Digital Television for All, was an EU-funded research project (2008-2011). The project aimed at helping to provide accessible digital television programmes across the European Union. https://cordis.europa.eu/project/id/224994 (consulted 19.04.2020)

Note 3:

ADLAB, Audio description: Lifelong Access for the Blind (2011-2014), and ADLAB PRO, Audio description: A Laboratory for the development of a new professional profile (2016-2019), were EU-funded Erasmus+ projects. ADLAB PRO was the natural evolution of the ADLAB project and aimed at identifying the inconsistencies in AD crafting methods and provision policies at European level, and at producing the first reliable and consistent European guidelines for the practice of AD. http://www.adlabproject.eu/ (consulted 19.04.2020)

Note 4:

A detailed description of the UAB in numbers for the academic year 2019-2020 is available online at: https://www.uab.cat/web/about-the-uab/the-uab/the-uab-in-figures-1345668682835.html (consulted 19.04.2020)
