The effect of subtitle speed and individual differences on subtitle processing in L1 and L2: an eye-tracking study on intralingual English subtitles

Agnieszka Szarkowska*1, University of Warsaw

Valentina Ragni**, University of Warsaw

Sonia Szkriba***, University of Warsaw

David Orrego-Carmona****, University of Warwick and University of the Free State

Sharon Black*****, University of East Anglia

ABSTRACT

The growing popularity of intralingual English subtitles in audiovisual content is transforming how both native and non-native English speakers engage with media. This trend has been accompanied by rising subtitle speeds, driven by global streaming platforms and social media, where a greater reliance on automation for subtitle creation often results in verbatim, excessively fast subtitles. This study investigates the impact of subtitle speed on L1-English and L2-English (L1-Polish) viewers, focusing on individual characteristics that impact subtitle processing. We tested 83 participants (33 L1 and 50 L2 speakers) while they watched English-language videos with intralingual subtitles. Eye movements were monitored to measure early and late stages of the reading process, word skipping, and proportional time spent in the subtitle area. Results indicate that L2 speakers were more affected by faster subtitles than L1 speakers: they spent more time in the subtitle area, skipped fewer words, and had lower recall accuracy. Additionally, factors such as age, working memory, previous exposure to audiovisual materials, and familiarity with subtitling were found to influence the viewing process. This paper presents the findings and assesses their implications for future subtitling research and practices.

KEYWORDS

Intralingual subtitling, captions, eye tracking, subtitle speed, individual differences, working memory, age, recall, reading, L1, L2.

Introduction

In recent years, subtitle viewing habits have undergone a significant shift, leading to the emergence of new subtitling audiences. Subtitles are no longer used exclusively by viewers with hearing impairments or those unfamiliar with the language of a film. Recent research indicates that many people, especially Gen Z and millennials, actively choose to watch content with subtitles, even in countries where subtitling has traditionally been uncommon (Greenwood, 2023). Additionally, there is a growing tendency to watch videos on mobile devices with the sound muted in various settings (Szarkowska et al., 2024). These patterns are seen among both viewers watching content in their first language (L1) and those engaging with foreign content through intralingual subtitles in their second language (L2). Another notable trend in subtitling is the rise in subtitle speeds, driven by technological advancements, faster speech rates, and fast-paced editing in audiovisual productions (Szarkowska, 2016a). Together, these developments raise important questions about their potential impact on viewers.

This study explores how individual characteristics of both viewers and subtitles affect the reading process, where reading is understood as a highly complex cognitive skill involving at least “the identification of word forms, the retrieval of their meaning [and] subsequently the integration of that meaning in the context of the sentence, paragraph or story” (Cop et al., 2017, p. 747). The key variable at the subtitle level is speed, while at the viewer level, it is the participants’ language. Specifically, L1-English and L2-English (L1-Polish) speakers are tested while viewing intralingual English subtitles. Additional subtitle-level factors include subtitle length, number of lines, word frequency and word length. In terms of viewer characteristics, the present study explores working memory, age, education, watching habits, and familiarity with the video. The aim is to explore how these variables affect viewer interaction with subtitled videos, thus enhancing our understanding of contemporary viewing practices and their impact on subtitle reading and associated cognitive processing.

Subtitle speed

Subtitle speed, also known as reading speed or presentation rate, is usually measured in characters per second (CPS) or words per minute (WPM), and denotes the rate at which subtitles are displayed. Although research on subtitle speed dates back to the early 1980s (d'Ydewalle et al., 1985), interest in this topic and its impact on the industry continues to grow today (Kruger et al., 2022; Lång et al., 2021; Liao et al., 2021; Szarkowska & Gerber-Morón, 2018).

In the 1980s, d'Ydewalle et al. (1987) discussed the “six-second rule”, explaining that a two-line subtitle with 64 characters (32 per line) should be displayed for at least six seconds (roughly 10.7 CPS) for comfortable reading. Subtitle speeds have since increased significantly, leading to changes in current subtitling practices (Hagström & Pedersen, 2022). Technological advancements have enabled an increase in character limits per line, from 35 in traditional TV broadcasts to 42 on streaming platforms (Díaz-Cintas & Remael, 2021; Netflix, 2021). A key factor in this shift is Netflix’s openly available style guide, which contrasts with the previously less accessible guides of national television broadcasters (Pedersen, 2018). Currently, Netflix sets maximum speeds for English subtitles for adult viewers at 20 CPS (Netflix, 2020, 2021). Stipulations of maximum speed might be further relaxed, as indicated by Ofcom’s recent statement on changes to the Access Services Code and update of best practice guidelines on access services, proposing “to replace the reference to specific subtitle speeds with the principle that subtitling should generally be synchronised with the audio as closely as possible” (Ofcom, 2024, p. 33).
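As a rough illustration of how the speed figures above relate to subtitle length and display time, the following sketch computes CPS and the minimum display time implied by a speed cap. The function names are hypothetical, and the sketch assumes that every character (including spaces) counts towards the length, which style guides may define differently.

```python
def chars_per_second(n_chars: int, duration_s: float) -> float:
    """Subtitle speed in characters per second (CPS)."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return n_chars / duration_s


def min_duration_s(n_chars: int, max_cps: float) -> float:
    """Shortest display time (in seconds) that keeps a subtitle at or below max_cps."""
    if max_cps <= 0:
        raise ValueError("max_cps must be positive")
    return n_chars / max_cps


# Six-second rule: two lines of 32 characters shown for 6 seconds.
six_second_rule_cps = chars_per_second(64, 6.0)

# A full two-line subtitle of 2 x 42 characters under a 20 CPS cap.
full_subtitle_min_s = min_duration_s(84, 20.0)

print(f"{six_second_rule_cps:.2f} CPS, {full_subtitle_min_s:.1f} s")
```

Under these assumptions, the six-second rule works out at just under 11 CPS, and a full 84-character subtitle would need a little over four seconds at a 20 CPS cap.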

The rise in subtitle speed is also driven by automation and new subtitle-creation methods. Automatic speech recognition is now commonly used to generate synchronised transcripts and time codes. Fast speech rates in audiovisual content can lead to verbatim subtitles displayed at speeds exceeding even Netflix’s recommendations. Kruger and Liao (2023) highlight issues with “ghost titles”, where subtitles disappear too quickly to be read. Increased subtitle speed is also evident on social media, where subtitles are often generated automatically and displayed in various formats, not necessarily as entire blocks of text, but word by word or phrase by phrase. Thus, subtitle speed values that were once considered comfortable to read may need to be revisited.

Speed as categorical vs. continuous variable

Most reception studies have treated subtitle speed as a categorical variable, where all subtitles in a given condition are displayed at fixed speeds. For example, Liao et al. (2021) and the subsequent replication by Szarkowska et al. (2024) used three speed conditions: 12, 20 and 28 CPS. This approach ensures methodological rigour by keeping speed constant to isolate its effect, but it lacks ecological validity. Style guides, such as Netflix (2021), only set a maximum speed; actual subtitle speeds vary within the allowed range, so viewers watching a film in real life experience a range of speeds. Building on Szarkowska et al. (2021), this study uses ‘actual speed’ as a continuous variable, reflecting typical viewer experiences and enabling fine-grained statistical analyses with linear mixed effects models (LMMs) (Meteyard & Davies, 2020). Until recently, eye-tracking studies treated subtitle speed categorically and relied on simpler statistical methods like t-tests and ANOVAs, which aggregate data across all subtitles and participants. Analysing speed as a continuous variable and employing LMMs enables analysis across multiple levels simultaneously, offering greater explanatory power and handling larger datasets more effectively (Silva et al., 2022).

Individual differences in subtitle reading

First language

Numerous studies have demonstrated the benefits of same-language subtitles for comprehension and vocabulary acquisition (for a recent review, see Montero Perez, 2022). However, differences in L1 and L2 processing remain understudied despite the increasing popularity of subtitled videos worldwide. Auditory challenges such as accents, intonation variations, and delivery nuances pose unique difficulties for L2 learners (Lee & Révész, 2020). L2 subtitles are valuable aids for visualising spoken words, clarifying ambiguous auditory input, and decoding speech into meaningful units (Winke et al., 2010; Wisniewska & Mora, 2020). However, concerns exist that L2 viewers may over-rely on subtitles, potentially hindering their engagement with the auditory channel (Danan, 2016; Mitterer & McQueen, 2009). L2 readers of both static text and subtitles have been found to make more and longer fixations, skip fewer words and read static texts more slowly compared to L1 readers (Conklin et al., 2020; Cop et al., 2015; Liao et al., 2022). These findings highlight nuanced differences in text and subtitle processing between L1 and L2 readers. Understanding these differences is crucial for improving subtitle design and enhancing the viewing experience for L2 learners.

Working memory

Working memory (WM) is considered to be “a temporary storage system under attentional control that underpins our capacity for complex thought” (Baddeley, 2007, p. 1). WM is understood to be involved in a wide range of complex cognitive behaviours, including comprehension and learning, and WM span tasks are deemed to be reliable and valid measures of WM capacity (Conway et al., 2005). Gass et al. (2019) investigated the impact of L2 learners’ WM capacities on their subtitle reading behaviour and comprehension of subtitled videos. They found that learners with a high WM capacity and high comprehension scores spent less time reading the subtitles and noted that subtitles may help neutralise some of the WM’s limiting effects on learning. Given these preliminary findings, the present study includes a measure of WM to assess whether and how WM differences affect L1/L2 subtitle reading.

Age

Although in recent years scholarly interest in investigating reading performance in age groups other than young adults has been growing, eye-movement research focusing on other ages is still scarce (Schroeder et al., 2015). Past research found that older readers (65+ years) tend to make more and longer fixations and skip words more often than young adults (Kliegl et al., 2004). Although the Kliegl study is over 20 years old, very few studies since then have used eye tracking to examine the role of age in subtitle reading. In rare exceptions, the subtitle reading behaviours of children, adolescents and adults were compared (d'Ydewalle & De Bruycker, 2007; Muñoz, 2017). Other studies, like Koolstra et al. (1999), examined solely children’s processing of subtitles displayed at three different speeds (L1 subtitles, L2 audio). However, it seems that no such study has yet examined older adults. Perego et al. (2015) investigated the cognitive and evaluative effects of viewing subtitled and dubbed videos in younger and older adults, but the study did not use eye tracking. Thus, more research is needed to investigate age-related differences in gaze behaviour when reading subtitles, focusing on different age groups across the lifespan, a gap we try to address in the present study.

Additional viewer-related characteristics

Experience with subtitling, education levels, and familiarity with experimental materials are often suggested to influence subtitle reading but are rarely controlled. Additionally, samples are usually so small and unbalanced that statistical tests might be underpowered.

Researchers typically try to prevent participants from having seen the videos used as stimuli in eye-tracking studies, so the impact of familiarity with the experimental materials is rarely explored. Winke et al. (2013) examined whether subject-matter familiarity affected how students of Arabic, Chinese, Spanish, and Russian read subtitles, finding a significant interaction only between Chinese language and subject-matter familiarity. Orrego-Carmona (2015) also investigated prior exposure to the study videos, revealing an interaction between English proficiency and content knowledge, which affected self-reported comprehension and subtitle-reading effort, but not eye-tracking measurements.

Moreover, analysing the impact of experience or familiarity with subtitling is essential to understanding audience engagement with subtitled content. In 1991, d’Ydewalle et al. examined intralingual subtitles, suggesting that long-term exposure to subtitling influences viewers’ reactions and positing a primacy effect for visual text. However, few studies control for this variable, often using participants’ country of origin as a proxy for subtitling familiarity. For example, Perego et al. (2010) mention that the participants in their subtitling study come from a traditionally dubbing country, Italy, and note their consequently limited experience with subtitling, while Künzli and Ehrensberger-Dow (2011, p. 189) mention that their German-speaking Swiss participants can be assumed to be familiar with subtitles because subtitles are available in Switzerland. In another study, Szarkowska and Gerber-Morón (2018) used country of origin as a proxy of familiarity with subtitling, finding that previous experience with subtitling might affect video processing. Some researchers assess familiarity with subtitling through questions (Bairstow & Lavaur, 2012), though this variable is often overlooked in the discussion. Bisson et al. (2014) suggested that unfamiliarity with subtitling might have influenced the lack of differences in fixation durations across various subtitle tasks (intralingual, standard and reversed subtitling). In his study on interlingual subtitles, Orrego-Carmona (2015) found that participants who rarely use subtitles but depend on them for understanding content spend more time on the subtitle area than those more accustomed to subtitles. 
Overall, while familiarity with subtitles is frequently mentioned as a potential explanatory variable, little effort has gone into assessing its impact, a deficit that the present study tried to tackle by explicitly asking participants how often they watch subtitled videos and including this variable in all statistical analyses.

Eye-tracking measures of subtitle processing

Duration-based word-level measures

In this study, two duration-based word-level measures are used to investigate reading at the word level: gaze duration (GD) and total time (TT). GD is defined as “the sum of all the fixations made in an interest area until the eyes leave the area” (Godfroid, 2020, p. 221), whilst TT is the sum of all fixation durations within the area of interest (p. 216). These measures reflect different stages of processing: whilst GD is considered an early measure that captures processing during the first reading pass, TT is a late measure that includes regressions and refixations made across all reading passes, and is believed to reflect later semantic integration processes (Clifton et al., 2007). In this study, we aim to determine whether the impact of reading speed is evident already at early processing stages or whether it emerges later, at the stage of semantic integration.
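The difference between the two measures can be sketched with a toy fixation sequence. The data, labels and function names below are hypothetical, and the sketch simplifies first-pass reading to "the first uninterrupted run of fixations on a word"; it is not the processing pipeline used in the study.

```python
from typing import List, Tuple

Fixation = Tuple[str, int]  # (word AOI label, fixation duration in ms)


def gaze_duration(fixations: List[Fixation], word: str) -> int:
    """Sum of fixations on `word` from first entry until the eyes leave it."""
    total = 0
    entered = False
    for aoi, duration in fixations:
        if aoi == word:
            entered = True
            total += duration
        elif entered:
            break  # the eyes left the word, so the first visit is over
    return total


def total_time(fixations: List[Fixation], word: str) -> int:
    """Sum of all fixations on `word`, including later revisits."""
    return sum(duration for aoi, duration in fixations if aoi == word)


# Chronological fixations on one subtitle: "comet" is refixated once during
# the first visit and then revisited after the reader has moved on to "is".
fixations = [("comet", 180), ("comet", 60), ("is", 120),
             ("comet", 150), ("coming", 200)]

print(gaze_duration(fixations, "comet"))  # 240: first visit only
print(total_time(fixations, "comet"))     # 390: first visit plus the revisit
```

The gap between the two values (150 ms here) comes entirely from the later revisit, which is why TT is treated as the later measure.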

Previous studies that treated speed as a categorical variable (Liao et al., 2021; Szarkowska et al., 2024) found that both GD and TT significantly decreased with higher speeds. Building on this research, we expect that high speed will negatively impact both early and late stages of subtitle reading. Interestingly, Liao et al. (2021) also demonstrated that well-known word-level effects such as word length and frequency tend to diminish at higher subtitle speeds among L1 viewers, a finding confirmed by Szarkowska et al. (2024) for L2 viewers. These linguistic effects were more evident at slower speeds (12 CPS) but less pronounced at faster speeds (20 and 28 CPS). This study examines word length and frequency to verify whether these established word properties affect both early (GD) and late (TT) reading processes. Additionally, drawing from relevant literature comparing L1 and L2 reading (Conklin et al., 2020; Cop et al., 2015), we anticipate that L2 viewers will have longer GD and TT compared to L1 viewers.

Proportional reading time

The primary concern regarding high subtitle speeds is that viewers may not have sufficient time to fully read the subtitles while keeping up with on-screen action. To assess the proportion of time spent by viewers reading subtitles (as opposed to following on-screen action), Koolstra et al. (1999) proposed the metric proportional reading time (PRT). PRT quantifies the percentage of time viewers spend reading subtitles relative to the subtitle’s duration. For example, if a 2-second subtitle is read for 2 seconds, it yields a PRT of 100%, whereas a 4-second subtitle read for 2 seconds results in a PRT of 50%.
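The two worked examples above can be reproduced with a minimal sketch. The function name is hypothetical, and "time spent reading" is assumed here to be the dwell time recorded in the subtitle area while the subtitle is on screen.

```python
def proportional_reading_time(dwell_ms: float, subtitle_duration_ms: float) -> float:
    """Percentage of a subtitle's display time spent in the subtitle area."""
    if subtitle_duration_ms <= 0:
        raise ValueError("subtitle_duration_ms must be positive")
    return 100.0 * dwell_ms / subtitle_duration_ms


print(proportional_reading_time(2000, 2000))  # 100.0
print(proportional_reading_time(2000, 4000))  # 50.0
```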

Previous research has shown that faster subtitle speeds typically lead to higher PRT (d'Ydewalle et al., 1985; Koolstra et al., 1999; Szarkowska et al., 2021). Some have argued that increasing subtitle speed from 120 to 200 WPM would drastically increase PRT from 40% to 80% (Romero-Fresco, 2015). However, recent research does not confirm this, suggesting that the relationship is more nuanced and influenced by factors like word frequency and concurrent on-screen action (Liao et al., 2021). While PRT provides a valuable measure of attention at the subtitle level, it does not capture cognitive processing at the word level (Kruger et al., 2022, p. 215). It nonetheless remains a useful metric that can complement detailed word-level analyses, an approach we adopt here to gain deeper insights into viewer interaction with subtitles. We hypothesise that L2 viewers will spend proportionally longer in the subtitle area compared to L1 viewers.

Word skipping

Skipping words is a natural phenomenon in reading all types of text (Rayner et al., 2016), influenced by factors such as word type (function vs. content), length, frequency, and contextual probability. Short, frequent words are more likely to be skipped than long, low-frequency words (Drieghe et al., 2004; Rayner et al., 2011), an effect that has been confirmed in subtitling, where longer words in subtitles were skipped less than shorter ones (Krejtz et al., 2016). Although short words might be skipped because they can be perceived parafoveally, visual acuity in the parafovea is limited (Rayner, 1998), so most words still have to be fixated to be identified and processed during reading (Reichle, 2021). Consequently, if subtitle speed is too high, viewers may be unable to read subtitles to completion and could end up entirely skipping some words, missing out on the ability to identify them. This could be particularly detrimental for L2 viewers, for whom L2 auditory input alone is typically harder to process. Prior research that looked at word skipping and operationalised speed as a categorical variable found that, at the highest speed, fewer words were fixated and fewer subtitles read to completion, resulting in a diminished ability to “react to anything in the text that trips up reading” (Kruger et al., 2022, p. 19).

In this study, skipping is measured as the percentage of completely skipped words per subtitle. In line with prior research, we expect that higher subtitle speeds will lead to more skipping in both language cohorts, diminishing lexical processing and the ability to identify words, especially in L2 viewers, who would miss out on the ability to visualise spoken words via the subtitles, parse the speech stream and focus attentional control (Gass et al., 2019). Drawing on the skipping findings of Cop et al. (2015) for natural text reading, we also expect L2 viewers to skip fewer words than L1 viewers.
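The skipping measure described above can be sketched as follows. The function name, the example subtitle and the set of fixated positions are hypothetical; the sketch assumes a word counts as skipped only if it received no fixation at any point while the subtitle was displayed.

```python
from typing import List, Set


def skipping_percentage(words: List[str], fixated_positions: Set[int]) -> float:
    """Percentage of words in one subtitle that were never fixated."""
    if not words:
        raise ValueError("subtitle must contain at least one word")
    skipped = sum(1 for i in range(len(words)) if i not in fixated_positions)
    return 100.0 * skipped / len(words)


subtitle = ["The", "comet", "is", "heading", "for", "Earth"]
fixated = {1, 3, 5}  # positions of "comet", "heading" and "Earth"

print(skipping_percentage(subtitle, fixated))  # 50.0
```

In this toy case the three short function words are skipped, which mirrors the pattern reported in the reading literature cited above.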

The current study

This study aims to investigate three areas: (1) the impact of subtitle speed on eye movements during intralingual subtitle processing, particularly whether speed, when treated as a continuous variable, significantly influences early or late-stage processing; (2) the differences in how L1-English and L2-English viewers process intralingual English subtitles; and (3) the effects of individual viewer characteristics (age, working memory capacity, education, experience with English subtitles, and familiarity with the video) and subtitle characteristics (word frequency, length, and subtitle duration) on subtitle processing.

Methodology

Participants

A total of 111 participants took part in the study, 46 L1-British English speakers from the UK and 65 L2-English L1-Polish speakers from Poland. Of these, 13 were excluded from the L1 cohort and 15 from the L2 cohort due to poor eye-tracking data quality, failing the working memory test, having dyslexia, or not being native speakers of the languages required by the study. Usable data was analysed from 83 participants: 33 L1 speakers and 50 L2 speakers. Mean age was 27.92 (SD=9.05), ranging from 18 to 52.

There were 21 females, 9 males, and 3 non-binary participants in the L1 cohort and 33 females, 16 males and 1 non-binary participant in the L2 cohort. Polish participants’ English proficiency was measured using two tests: LexTALE (Lemhöfer & Broersma, 2012) and the online Cambridge General English proficiency test2. The mean LexTALE score was 80.2 (SD = 10.55), ranging from 53.75 to 100 (maximum). The mean score for the Cambridge test was 22.78 (SD = 2.41), ranging from 16 to 25 (maximum). The tests show that Polish participants had an average proficiency of C1/C2 according to the Common European Framework of Reference for Languages (CEFR).

Materials

Participants viewed two 6-7-minute excerpts from the American film Don’t Look Up (2021, dir. Adam McKay), depicting two astronomers’ efforts to alert the world to an imminent comet threatening the Earth. The clips were shown with audio and intralingual English subtitles. In total, there were 228 subtitles (99 in clip 1, 129 in clip 2), with an average subtitle speed of 16.25 CPS, ranging from 4 to 27.65 CPS. The minimum duration of a subtitle was 1 second (to avoid having “ghost titles”, see Kruger & Liao (2023)), and the maximum 6 seconds. After watching each clip, participants answered a series of questions assessing their memory recall.

Procedure

Ethical clearance was secured from the ethics committees of each institution where data collection took place (University of Warsaw in Poland and University of East Anglia in the UK). Participants provided informed consent and were tested individually in the lab. All participants completed a demographics questionnaire that included AVT preferences and subtitling experience, operationalised as the frequency of exposure to intralingual English subtitling and measured on a 1-7 self-reported scale, where (1) meant no experience at all, and (7) indicated high familiarity with this task. The questionnaire also asked how many years of formal education participants had attained. Next, L2 participants completed English proficiency tests. After eye-tracking calibration, each participant watched the two video clips, each followed by a recall questionnaire.

Recall was assessed through 30 multiple-choice questions (15 per clip). Participants were asked to recall what exactly was said in a subtitle by choosing one of four options (see supplementary materials). Each question targeted a specific subtitle and therefore a specific subtitle speed, the hypothesis being that the higher the speed, the lower the recall. Out of a total of 30 recall questions, 16 corresponded to subtitles displayed at a speed of 10-17 CPS, and 14 to subtitles displayed at 17-25 CPS. The subtitle speed corresponding to each question was included in the model as a predictor of recall accuracy.

After the eye-tracking part, participants completed a 10 to 15-minute interview (not reported here) and the Reading Span Task (RSPAN) to assess working memory. The test was administered using Inquisit 4 Lab (Millisecond). The traditional absolute RSPAN scoring method was used (see Conway et al. (2005)). After the experiment, participants were given a bookshop voucher as a reward for their time.

Data analysis method

This study employs a mixed design with participants’ language (L1-English and L2-English) as the main between-subject independent variable and subtitle speed as the within-subject variable. The analysis focuses on five dependent variables: proportional reading time (PRT), gaze duration (GD), total time (TT), word skipping, and recall accuracy. Out of the four eye movement metrics, PRT was calculated at the subtitle level (i.e., the Areas of Interest (AOIs) were whole subtitles), whereas GD, TT and skipping were calculated at the word level (i.e., the AOIs were individual subtitle words). The key variables of interest – language and speed – and their interactions were included in all models. Several variables were added as covariates and were left in the models if removing them significantly decreased model fit (see Table 2). Full details of the modelling can be found in supplementary materials3.
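One common way to decide whether removing a covariate "significantly decreases model fit" is a likelihood ratio test between nested models. The text above does not state which criterion was applied, so the sketch below is only an illustration under that assumption; the log-likelihood values are invented, and the function name is hypothetical. It handles the single-parameter case using only the standard library.

```python
import math
from typing import Tuple


def lrt_one_parameter(loglik_full: float, loglik_reduced: float) -> Tuple[float, float]:
    """Likelihood ratio test for dropping ONE fixed effect (1 degree of freedom).

    Both models are assumed to be nested and fitted by maximum likelihood
    on the same data.
    """
    statistic = max(2.0 * (loglik_full - loglik_reduced), 0.0)
    # Chi-square survival function with 1 df: P(X > x) = erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical log-likelihoods for a model with and without one covariate.
statistic, p_value = lrt_one_parameter(loglik_full=-1000.0, loglik_reduced=-1003.0)
keep_covariate = p_value < 0.05

print(f"chi2(1) = {statistic:.2f}, p = {p_value:.4f}, keep = {keep_covariate}")
```

A rule of this kind is one way a predictor whose own coefficient falls just short of significance could still be retained.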

Results

Table 1 presents descriptive statistics for each of the five dependent variables. As anticipated, L2 viewers exhibited longer gaze durations and total times than L1 viewers. They also spent a greater proportion of time in the subtitle area and had lower recall accuracy. Additionally, L2 viewers skipped fewer subtitle words than their L1 counterparts.

| Dependent measures | L1-English (UK), mean (SD) | L2-English (L1-Polish), mean (SD) |
|---|---|---|
| Gaze duration | 209.3 ms (113) | 221.9 ms (113.8) |
| Total time | 263.2 ms (176.9) | 274.0 ms (171.6) |
| Proportional reading time | 41.11% (20.54) | 48.58% (22.13) |
| Word skipping | 51% (23.8) | 43% (25.0) |
| Recall | 80% (40) | 73.2% (44.3) |

Table 1. Descriptive statistics by language group

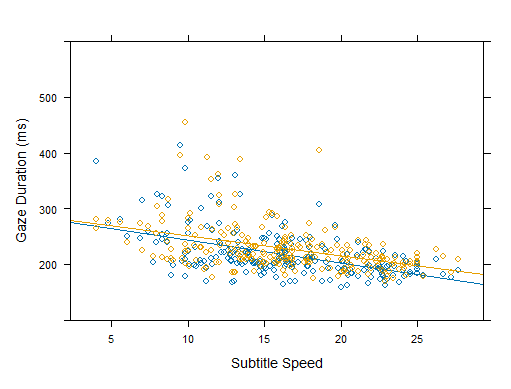

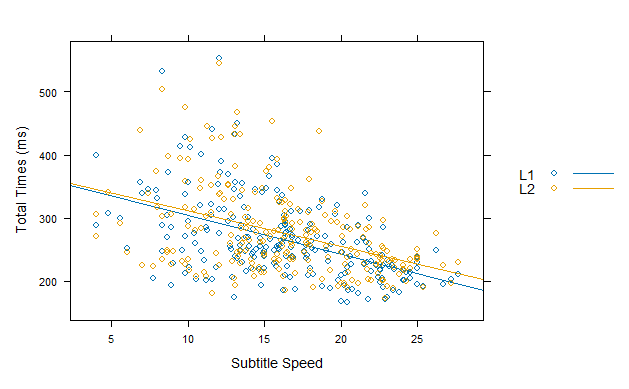

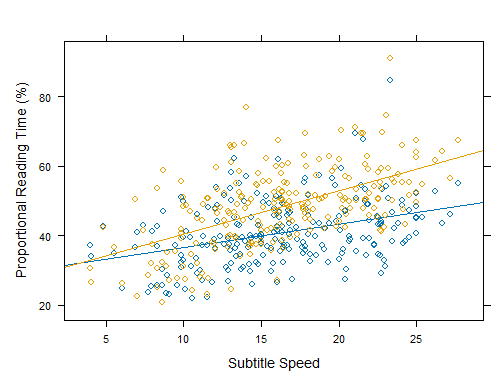

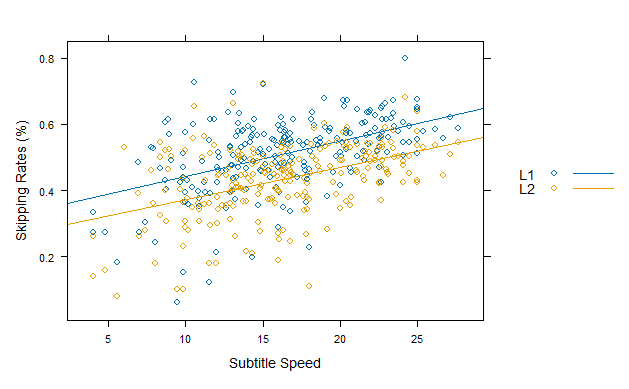

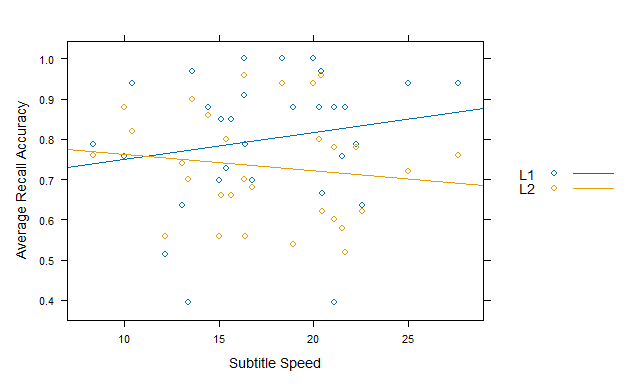

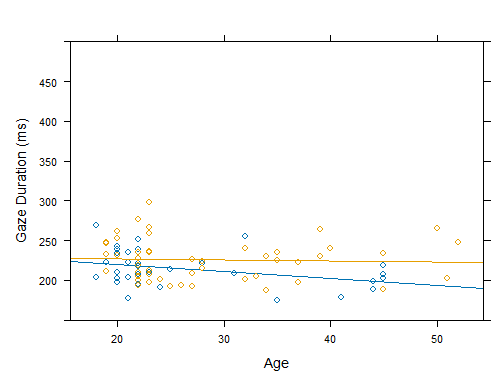

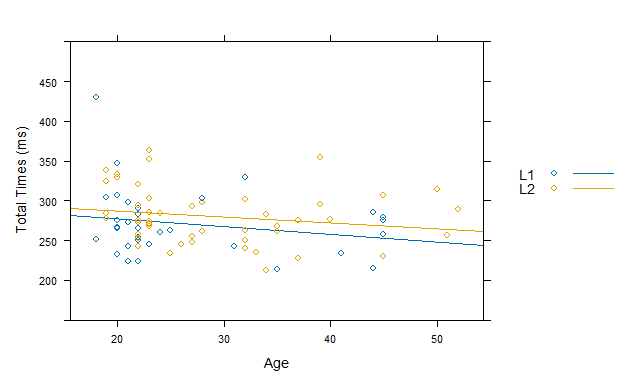

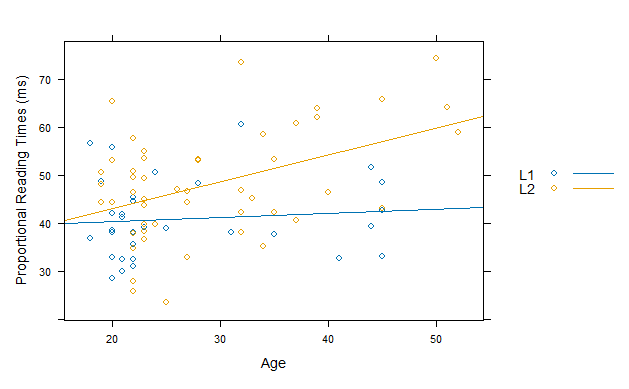

Figures 1-3 below illustrate the relationship between the two key predictors of interest (subtitle speed and viewers’ L1) and each of the dependent measures analysed.

Figures 1a-1b. Gaze duration and total time by subtitle speed and language

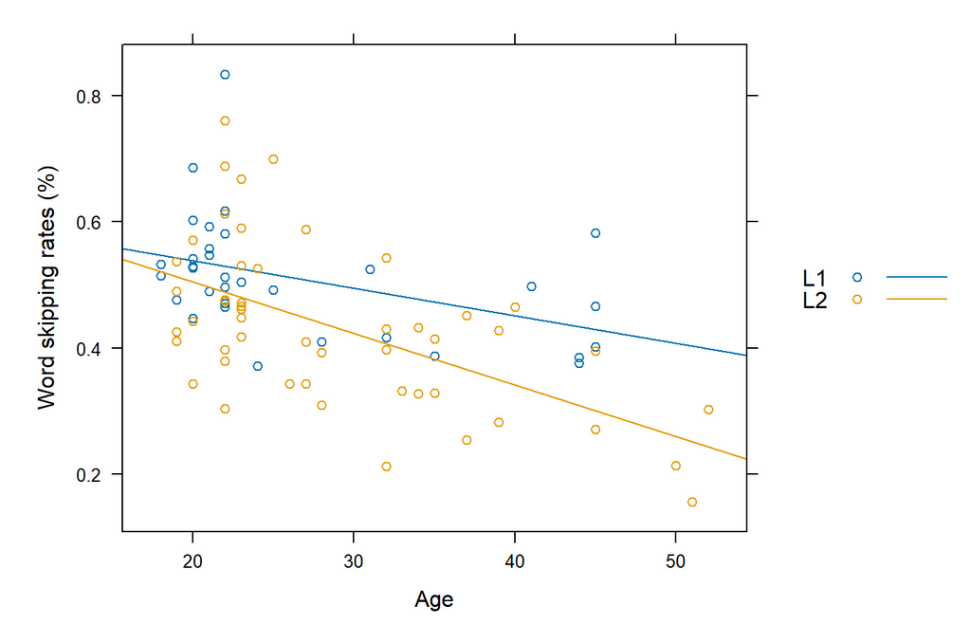

Figures 2a-2b. Proportional reading time and word skipping by subtitle speed and language

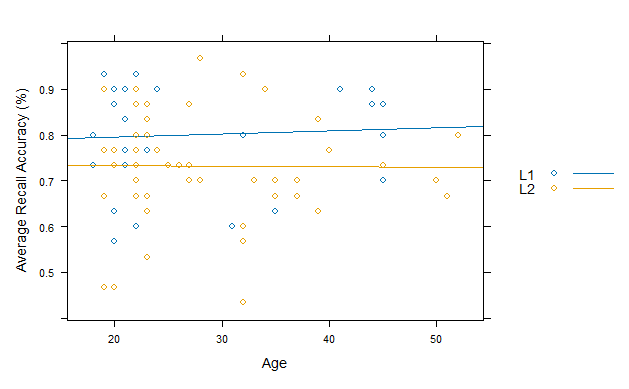

Figure 3. Recall accuracy by subtitle speed and language

Figures 1a-1b illustrate an inverse relationship between subtitle speed and word-level measures: higher speeds triggered shorter gaze durations and total times for both language cohorts. As expected, L2 viewers spent more time reading subtitle words than L1 viewers in both early and late eye movements. In Figure 2, an increase in speed correlates with higher PRT and increased word skipping for both language groups. However, Figure 2a reveals an interaction effect between speed and language for PRT: at higher speeds, L2 viewers spend significantly more time in the subtitle area than L1 viewers, whereas this difference diminishes at lower speeds. In contrast, Figure 2b shows that L1 viewers consistently skip more words than L2 viewers across all speeds. Moreover, while Table 1 indicates higher average recall accuracy for L1 viewers, Figure 3 demonstrates that at lower speeds (roughly up to 12 CPS), recall rates are comparable between cohorts, with L2 viewers even slightly outperforming L1 viewers in accuracy. However, as speed increases, L2 viewers’ ability to accurately recall exact subtitles declines sharply, whereas L1 viewers’ accuracy improves. Table 2 below presents summaries of the final models for all the analyses.

| Measures | Contrasts | β | SE | t/z | p |
|---|---|---|---|---|---|
| Gaze duration | Intercept | 5.319 | 0.044 | 120.190 | <0.001*** |
| | Language [L2] | 0.067 | 0.021 | 3.069 | 0.002** |
| | Speed | -0.009 | 0.000 | -10.690 | <0.001*** |
| | Word Frequency | -0.032 | 0.003 | -9.222 | <0.001*** |
| | Word length | 0.021 | 0.002 | 10.590 | <0.001*** |
| | Number of lines | 0.0566 | 0.007 | 7.131 | <0.001*** |
| | Videoclip [Part 2] | -0.024 | 0.007 | -3.498 | <0.001*** |
| | Age | -0.005 | 0.001 | -2.919 | 0.004** |
| | Experience with English subtitles | -0.019 | 0.007 | -2.675 | 0.009** |
| | Language * Speed | -0.000 | 0.000 | -0.835 | 0.403 |
| | Language * Age | 0.004 | 0.002 | 2.172 | 0.032* |
| Total time | Intercept | 5.457 | 0.054 | 100.268 | <0.001*** |
| | Language [L2] | 0.074 | 0.025 | 2.881 | 0.005** |
| | Speed | -0.020 | 0.001 | -16.418 | <0.001*** |
| | Word Frequency | -0.046 | 0.004 | -9.514 | <0.001*** |
| | Word length | 0.038 | 0.002 | 13.181 | <0.001*** |
| | Number of lines | 0.140 | 0.011 | 12.727 | <0.001*** |
| | Videoclip [Part 2] | -0.059 | 0.010 | -5.924 | <0.0001*** |
| | RSPAN | -0.001 | 0.000 | -1.961 | 0.0534 |
| | Seen before [Yes] | -0.048 | 0.024 | -1.963 | 0.0531 |
| | Experience with English subtitles | -0.020 | 0.008 | -2.435 | 0.0171* |
| | Language * Speed | -0.000 | 0.00 | -0.108 | 0.913 |
| Proportional reading time | Intercept | 53.262 | 4.529 | 11.759 | <0.001*** |
| | Language [L2] | 5.046 | 2.198 | 2.295 | 0.0245* |
| | Speed | 0.563 | 0.132 | 4.234 | <0.001*** |
| | Subtitle length | 0.088 | 0.037 | 2.330 | 0.020* |
| | RSPAN | -0.159 | 0.059 | -2.691 | 0.008** |
| | Age | 0.179 | 0.186 | 0.963 | 0.338 |
| | Seen before [Yes] | -3.893 | 1.919 | -2.029 | 0.046* |
| | Experience with English subtitles | -1.979 | 0.692 | -2.856 | 0.005** |
| | Education | -0.877 | 0.363 | -2.413 | 0.018* |
| | Language * Speed | 0.530 | 0.052 | 10.095 | <0.001*** |
| | Language * Age | 0.543 | 0.220 | 2.470 | 0.015* |
| Word skipping | Intercept | 0.515 | 0.021 | 23.908 | <0.001*** |
| | Language [L2] | -0.056 | 0.021 | -2.669 | 0.009** |
| | Speed | 0.010 | 0.001 | 8.321 | <0.001*** |
| | RSPAN | 0.001 | 0.000 | 3.133 | 0.002** |
| | Age | -0.008 | 0.001 | -6.103 | <0.001*** |
| | Seen before [Yes] | 0.044 | 0.001 | 2.120 | 0.037* |
| | Education | 0.007 | 0.003 | 1.861 | 0.066 |
| | Language * Speed | -0.000 | 0.000 | -1.626 | 0.104 |
| Recall accuracy | Intercept | 1.504 | 0.198 | 7.594 | <0.001*** |
| | Language [L2] | -0.050 | 0.166 | -0.301 | 0.762 |
| | Speed | 0.300 | 0.172 | 1.742 | 0.081 |
| | Total time | 0.122 | 0.078 | 1.571 | 0.116 |
| | Reaction time | -0.080 | 0.069 | -11.619 | <0.001*** |
| | Language * Speed | -0.250 | 0.109 | -2.286 | 0.022* |

Table 2. Results of linear mixed effects modelling

Gaze duration and total time

The average gaze duration on a word in the subtitles in the first pass across all conditions was 217 ms and the average total time spent on a word in a subtitle was 270 ms. The word-level analyses showed that, as speed increased, time spent reading decreased, showing statistically significant effects both at the early reading stage (GD) and overall (TT). The results also indicate that L2 viewers spent significantly longer reading subtitle words both at the early and late stages. No statistically significant interaction between speed and language was found.

An interesting and unexpected difference emerged in terms of language and age, which interacted in the early measure (GD) but not in the late one (TT), as shown in Figure 4. Older viewers had shorter gaze duration, but only in the L1 cohort. For the total time, both age and its interaction with language were not statistically significant, did not improve model fit, and were therefore dropped from the final model.

Figures 4a-4b. Gaze duration and total time by language and age

Consistent with previous research, word frequency and length showed statistically significant main effects on early and late eye-tracking measures. Additionally, the number of subtitle lines and the video clip produced statistically significant main effects, indicating that viewers spent more time reading subtitles with two lines, and the first videoclip required more effort to process, with significantly higher GD and TT compared to the second clip. Experience with English subtitles also influenced both measures, as L1 and L2 individuals accustomed to watching subtitled content with intralingual English subtitles exhibited shorter GD and TT. Although working memory and familiarity with the film did not reach statistical significance (p=0.053) in total time spent on subtitles, their exclusion reduced model fit, so they were retained in the final model.

Proportional reading time

The average PRT across all conditions was 45.63%, indicating that viewers spent nearly half of the time reading the subtitles while they were displayed. We observed a main effect of speed, showing that PRT increased with subtitle speed. This suggests that viewers allocated proportionally more time to reading subtitles when they were presented faster, potentially diverting attention from the on-screen action. We also found a statistically significant interaction between speed and language: high speed had a greater effect on L2 viewers, who spent longer in the subtitle area, than on L1 speakers.

Figure 5. Proportional reading time by language and age

As for other individual participant characteristics, whilst age alone did not have an effect on PRT, there was a statistically significant interaction between age and language: PRT grew with participants’ age, but only for L2 speakers (see Figure 5). Other statistically significant predictors of PRT were viewers’ working memory capacity and experience with English subtitles: PRT was lower for people with higher RSPANs and for those who were more accustomed to subtitling. Participants who had seen the film before also had a lower PRT compared to those who had not. Unlike in other analyses, education also had a statistically significant effect on PRT, with more years spent studying resulting in lower PRT. Finally, subtitle length also showed a statistically significant main effect, with longer subtitles corresponding to higher PRT (see Table 2).
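To make the proportional reading time measure concrete, the following minimal sketch computes it for one subtitle as the share of display time spent looking at the subtitle area. The function name, its parameters and the sample values are illustrative assumptions; the exact computation used in the study's pipeline is not described in this section.

```python
def proportional_reading_time(dwell_ms, display_ms):
    """Percentage of a subtitle's display time spent looking at the subtitle area."""
    if display_ms <= 0:
        raise ValueError("display_ms must be positive")
    return 100.0 * dwell_ms / display_ms


# Invented example: 1,140 ms of dwell time on a subtitle shown for 2,500 ms.
prt = proportional_reading_time(1140, 2500)
print(f"{prt:.2f}%")  # 45.60%
```

Because the denominator is the display duration, a faster subtitle (shorter display time for the same text) can yield a higher PRT even when the absolute reading time does not change.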

Word skipping

The mean percentage of words skipped per subtitle was 46%, indicating that participants skipped nearly half of the words in subtitles. Speed influenced word skipping, with higher speeds correlating with increased skipping. A main effect of language was also found: L1 English speakers skipped more words (M = 51%) than L2 speakers (M = 43%).

Regarding age, Figure 6 shows that younger viewers (around 20 years old) had comparable skipping rates across both language groups, while older L2 viewers (especially those above 40) appeared to read more thoroughly, skipping only around 20% of words compared to L1 viewers who skipped around 40%. Although in the skipping analysis this interaction was not significant and was therefore dropped from the final model, a significant main effect of age was recorded, showing that as age increased, word skipping decreased in both cohorts.

Figure 6. Skipping by language and age

As with PRT, working memory capacity and whether participants had seen the film before also had a statistically significant effect on skipping: those who had seen the film before tended to skip more words, and so did those with higher working memory capacity. Education was not statistically significant (p = 0.066), but dropping it decreased model fit, so it was kept in the final model.
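The skipping measure reported above can be sketched as the share of words in a subtitle that received no fixation. This is a simplified illustration: the function name, the index-based input and the sample values are assumptions made for the example rather than the study's actual procedure.

```python
def skipping_rate(n_words, fixated_word_indices):
    """Percentage of words in a subtitle that received no fixation at all."""
    if n_words <= 0:
        raise ValueError("n_words must be positive")
    # Repeated fixations on the same word count once; out-of-range indices are ignored.
    fixated = {i for i in fixated_word_indices if 0 <= i < n_words}
    return 100.0 * (n_words - len(fixated)) / n_words


# Invented example: an 8-word subtitle in which words 0, 2, 3 and 6 were fixated.
print(skipping_rate(8, [0, 2, 3, 3, 6]))  # 50.0
```

A rate computed this way treats all words alike, so short function words and longer content words contribute equally to the percentage.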

Recall

Despite the fast pace of both the dialogues and the subtitles, and the film’s technical vocabulary on astronomy, both participant groups achieved high recall scores. While recall accuracy was the only analysis where neither speed nor language demonstrated significant main effects, their interaction was nonetheless statistically significant, suggesting a slight decrease in recall accuracy at higher subtitle speeds, but only for Polish participants. In contrast, L1 English speakers were not negatively affected by subtitle speed; in fact, their recall accuracy improved as speed increased (see Figure 3). Model results also showed that age did not have any effect on recall, either independently or in interaction with language (Figure 7).

Figure 7. Recall by language group and age

Interestingly, recall accuracy was not affected by the total time spent reading subtitles or by any other covariates. The only statistically significant predictor of recall accuracy was reaction time, i.e., how long a participant took to respond to the questions. As reaction times increased, accuracy decreased, possibly suggesting that participants dwelled for longer on questions they were not sure of, which they tended to answer incorrectly.

Discussion

This study investigated the impact of subtitle speed on L1-English and L2-English viewers reading intralingual English subtitles. As hypothesised, statistically significant effects of both speed and native language were observed across all eye-tracking measures. Using speed as a continuous variable rather than a categorical factor revealed additional nuances in subtitle processing.

By treating speed as a continuous variable, we could precisely track the effects of increasing subtitle speed. For instance, in our modelling we were able to predict that for every increase of one character per second in subtitle speed, the time viewers spent reading the subtitles, as measured by PRT, would rise by 0.56 percentage points. Therefore, a rise in speed from 10 CPS to 20 CPS would result in an increase of 5.6 percentage points in PRT. Understanding the proportion of visual attention that viewers allocate to subtitles is useful in various subtitling contexts. For example, in talking-head videos, where following the on-screen action is less critical, higher speeds might be acceptable. However, in genres such as educational content, where important visual information like mathematical formulas is presented on screen, a reduction in speed could be more crucial to enable content understanding.
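The arithmetic behind this prediction can be written out as a small sketch. It uses only the 0.56 slope reported in the text and deliberately ignores the speed-by-language interaction and the other covariates in the model, so it is a simplification rather than a reproduction of the fitted model; the function and constant names are illustrative.

```python
PRT_SLOPE_PER_CPS = 0.56  # change in PRT per 1 CPS, as reported in the text


def predicted_prt_change(cps_from, cps_to, slope=PRT_SLOPE_PER_CPS):
    """Linear change in PRT implied by a change in subtitle speed (in CPS)."""
    return slope * (cps_to - cps_from)


print(round(predicted_prt_change(10, 20), 2))  # 5.6
print(round(predicted_prt_change(12, 17), 2))  # 2.8
```

Because the relationship is modelled as linear, the same slope applies to any interval: a 5 CPS increase corresponds to half the change predicted for a 10 CPS increase.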

A key finding of this study is the strong impact of the viewers’ first language on subtitle reading: overall, the effects of increasing subtitle speed were more pronounced in the L2 cohort. On average, L2 viewers took longer to read words at both early and late stages of processing, spent proportionally longer in the subtitle area, and skipped fewer words than L1 speakers. L2 viewers also had lower recall accuracy. These findings collectively suggest that reading in an L1 – including the reading of subtitles – is faster and possibly more efficient than in an L2, which is in line with previous research outside the subtitling context (Conklin et al., 2020; Cop et al., 2015).

One of the goals of this study was to discern the impact of individual participants’ characteristics on how they process subtitled videos. Of the variables considered, four variables showed statistically significant effects on eye movements: age, working memory, experience with English subtitling, and prior familiarity with the video.

Regarding age, in previous studies on reading printed text, it has been observed that older individuals generally read more slowly and have longer fixation durations (Rayner et al., 2012), possibly due to cognitive decline processes (Harada et al., 2013). However, their comprehension tends to remain as good as that of younger readers (Rayner et al., 2012). In our study, we identified a statistically significant main effect of age and its interaction with language, particularly evident in the early stages of subtitle processing measured by gaze duration. In contrast to Rayner et al. (2012), we found that older individuals, particularly L1 speakers, spent less time on words in first-pass subtitle reading compared to younger L1 individuals. One possible reason could be that it took older viewers longer to reach the words in the subtitles, i.e., to move their gaze smoothly between the images and the words. The implication here might be that younger L1 readers are faster at shifting gaze between images and subtitles and thus end up with more time to read the words before the subtitles disappear. An alternative explanation for this age difference in L1 reading behaviour in GD could be the greater reading experience that comes with age. Interestingly, age did not influence the early stages of subtitle processing among L2 speakers. This may be tentatively taken to suggest that the early cognitive processes involved in word identification and lexical access remain the same for younger and older L2 viewers alike. However, given the limited number of older participants in our sample, these findings should be interpreted with caution. Further research with a more age-diverse population is needed to better understand how age may interact with language background in shaping subtitle processing.

The finding that age effects are significant in early but not late reading measures, primarily for the L1 cohort, is complemented by a lower rate of word skipping among older participants, who tended to skip fewer words compared to younger ones in both language groups. This trend was particularly noticeable among older L2 speakers, who skipped fewer words than their L1 counterparts, although this interaction was not statistically significant. Furthermore, as indicated by PRT, older Polish participants spent a greater proportion of time reading subtitles compared to younger Polish participants. This age-related effect was not observed among English participants. We hypothesise that this difference could be attributed to the viewing habits formed by Polish participants during their youth. In their formative years, older Polish participants were exposed to voice-over before the rise of digital television and streaming platforms. Younger Polish participants, by contrast, might have developed different audiovisual consumption habits through exposure to more alternatives and the opportunity to make informed decisions about when, how and how often to watch subtitled content. Descriptive statistics show that participants who preferred voice-over (a minority) had a higher mean PRT (M = 52.53%) compared to those who preferred subtitling (M = 45.79%), possibly suggesting that early exposure to different AVT methods may contribute to increasing the time older Polish participants spent reading subtitles.

We also observed that working memory capacity significantly influenced subtitle processing. In line with Gass et al. (2019), participants with higher RSPAN scores generally spent less time on subtitles, as indicated by significant reductions in PRT and TT (but not GD), and skipped more words. Contrary to our hypothesis, however, working memory capacity did not have a statistically significant effect on participants’ ability to recall information. This outcome could be attributed to the auditory component: since participants could hear and understand the spoken dialogues, they did not rely solely on the subtitles for comprehension and information integration, but used them as supplementary information aids.

Our results stress the need to assess watching habits and therefore previous experience with the task (here: reading intralingual English subtitles) rather than assuming that populations from a given country are used to a specific translation modality. With independent and evolving audiences, country-level preferences do not seem to represent the reality of viewers. Similarly to Orrego-Carmona (2015), participants who declared using subtitles more often had shorter GD and TT, and consequently a lower PRT, exhibiting more efficient reading.

Considering the impact of familiarity with the content on PRT and word skipping, our results suggest that having previously seen a film can affect the viewing process. Future subtitle processing studies should therefore either make sure the experimental materials chosen are not already known to the participants, or explicitly ask whether participants are familiar with the film/series used and include this factor in their analyses.

In addition to the eye-tracking measures, we were interested in the effect of speed and language on participants’ ability to recall information. Contrary to our hypothesis that high subtitle speeds would negatively affect all participants’ ability to recall words and phrases from the subtitles, higher speeds had a negative effect only for L2 viewers. It is important to note, however, that recall accuracy was generally high, even for L2 speakers, ranging from around 80% at slower speeds to around 70% at higher speeds. Since the L2 viewers in this study were affected despite their high proficiency, it is possible that negative effects of speed would be even more pronounced among viewers with lower L2 proficiency. Memory is a key component of human cognition and learning (Ellis, 2001), and although the present study was not designed to test L2 learning, the recall findings indirectly suggest that excessive speeds may actually nullify the much-touted benefits of same-language subtitles in foreign-language learning contexts at earlier stages of learning, a hypothesis that deserves further investigation given the documented rise in subtitle speeds (Szarkowska, 2016a, 2016b).

Conclusions, limitations and future research

This study demonstrated that actual subtitle speed – measured as a continuous variable that changes for each subtitle rather than as a categorical factor that stays the same for all subtitles in a given video – significantly affects eye movements during subtitle reading. Moreover, the impact is more pronounced for L2 viewers, who, despite their high proficiency, are more sensitive to faster subtitles than L1 viewers. Collectively, the findings highlight the challenges posed by the widespread use of fast intralingual English subtitles in audiovisual content for L2-English speakers.

In terms of limitations, similar to previous research on skipping (Kruger et al., 2022), our word skipping analysis did not differentiate between function words and content words. However, it is crucial to consider word type to accurately evaluate skipping behaviour in subtitle reading, given that short function words (e.g., articles, prepositions, conjunctions) are often skipped regardless of subtitle speed. Future studies should distinguish between overall word skipping and skipping of content words specifically. Addressing this limitation would result in a more nuanced understanding of speed effects on subtitle reading dynamics.

The study also explored how a number of individual viewer characteristics affect reading, providing evidence that age, working memory capacity, experience with subtitles and with the video significantly influence engagement with subtitled audiovisual content. These findings reveal audience differences and call for closer analyses of participant-level variables in future research on subtitling.

References

Baddeley, A. D. (2007). Working Memory, Thought, and Action. Oxford University Press.

Bairstow, D., & Lavaur, J. M. (2012). Audiovisual Information Processing by Monolinguals and Bilinguals: Effects of Intralingual and Interlingual Subtitles. In A. Remael, P. Orero, & M. Carroll (Eds.), Audiovisual Translation and Media Accessibility at the Crossroads (pp. 273–293). Rodopi.

Bisson, M.-J., Van Heuven, W. J. B., Conklin, K., & Tunney, R. J. (2014). Processing of native and foreign language subtitles in films: An eye tracking study. Applied Psycholinguistics, 35(2), 399-418. https://doi.org/10.1017/s0142716412000434

Clifton, C., Staub, A., & Rayner, K. (2007). Eye movements in reading words and sentences. In R. P. G. Van Gompel, M. H. Fischer, W. S. Murray, & R. L. Hill (Eds.), Eye Movements. A Window on Mind and Brain (pp. 341-371). Elsevier. https://doi.org/10.1016/B978-008044980-7/50017-3

Conklin, K., Alotaibi, S., Pellicer-Sánchez, A., & Vilkaitė-Lozdienė, L. (2020). What eye-tracking tells us about reading-only and reading-while-listening in a first and second language. Second Language Research, 36(3), 257-276. https://doi.org/10.1177/0267658320921496

Conway, A. R. A., Kane, M. J., Bunting, M. F., Hambrick, D. Z., Wilhelm, O., & Engle, R. W. (2005). Working memory span tasks: A methodological review and user’s guide. Psychonomic Bulletin & Review, 12(5), 769-786. https://doi.org/10.3758/BF03196772

Cop, U., Dirix, N., Van Assche, E. V. A., Drieghe, D., & Duyck, W. (2017). Reading a book in one or two languages? An eye movement study of cognate facilitation in L1 and L2 reading. Bilingualism, 20(4), 747-769. https://doi.org/10.1017/S1366728916000213

Cop, U., Drieghe, D., & Duyck, W. (2015). Eye Movement Patterns in Natural Reading: A Comparison of Monolingual and Bilingual Reading of a Novel. PLoS ONE, 10(8), e0134008. https://doi.org/10.1371/journal.pone.0134008

d'Ydewalle, G., & De Bruycker, W. (2007). Eye movements of children and adults while reading television subtitles. European Psychologist, 12(3), 196-205. https://doi.org/10.1027/1016-9040.12.3.196

d'Ydewalle, G., Muylle, P., & Van Rensbergen, J. (1985). Attention shifts in partially redundant information situations. In R. Groner, G. W. McConkie, & C. Menz (Eds.), Eye Movements and Human Information Processing (pp. 375-384). Elsevier.

d'Ydewalle, G., Praet, C., Verfaillie, K., & Van Rensbergen, J. (1991). Watching subtitled television: automatic reading behavior. Communication Research, 18(5), 650-666.

d'Ydewalle, G., Van Rensbergen, J., & Pollet, J. (1987). Reading a message when the same message is available auditorily in another language: the case of subtitling. In J. K. O'Regan & A. Levy-Schoen (Eds.), Eye Movements: from Physiology to Cognition (pp. 313-321). Elsevier.

Danan, M. (2016). Enhancing listening with captions and transcripts: Exploring learner differences. Applied Language Learning, 26(2), 1-24.

Díaz-Cintas, J., & Remael, A. (2021). Subtitling. Concepts and Practices. Routledge.

Drieghe, D., Brysbaert, M., Desmet, T., & De Baecke, C. (2004). Word skipping in reading: On the interplay of linguistic and visual factors. European Journal of Cognitive Psychology, 16(1-2), 79-103. https://doi.org/10.1080/09541440340000141

Ellis, N. C. (2001). Memory for language. In P. Robinson (Ed.), Cognition and Second Language Instruction (pp. 33-68). Cambridge University Press. https://doi.org/10.1017/CBO9781139524780.004

Gass, S., Winke, P., Isbell, D. R., & Ahn, J. (2019). How captions help people learn languages: A working-memory, eye-tracking study. Language Learning & Technology, 23(2), 84-104.

Godfroid, A. (2020). Eye tracking in second language acquisition and bilingualism: a research synthesis and methodological guide. Routledge.

Greenwood, G. (2023, February 27). Younger TV viewers prefer to watch with subtitles on. The Times, 18. https://www.thetimes.com/culture/tv-radio/article/younger-tv-viewers-prefer-to-watch-with-subtitles-on-bbbcthft8

Hagström, H., & Pedersen, J. (2022). Subtitles in the 2020s: The Influence of Machine Translation. Journal of Audiovisual Translation, 5(1), 207-225. https://doi.org/10.47476/jat.v5i1.2022

Harada, C. N., Natelson Love, M. C., & Triebel, K. L. (2013). Normal cognitive aging. Clinics in Geriatric Medicine, 29(4), 737-752. https://doi.org/10.1016/j.cger.2013.07.002

Kliegl, R., Grabner, E., Rolfs, M., & Engbert, R. (2004). Length, frequency, and predictability effects of words on eye movements in reading. European Journal of Cognitive Psychology, 16(1-2), 262-284. https://doi.org/10.1080/09541440340000213

Koolstra, C. M., Van Der Voort, T. H. A., & d'Ydewalle, G. (1999). Lengthening the presentation time of subtitles on television: effects on children’s reading time and recognition. Communications, 24(4), 407-422. https://doi.org/10.1515/comm.1999.24.4.407

Krejtz, I., Szarkowska, A., & Łogińska, M. (2016). Reading function and content words in subtitled videos. Journal of Deaf Studies and Deaf Education, 21(2), 222-232. https://doi.org/10.1093/deafed/env061

Kruger, J.-L., Wisniewska, N., & Liao, S. (2022). Why subtitle speed matters: Evidence from word skipping and rereading. Applied Psycholinguistics, 43, 211–236. https://doi.org/10.1017/s0142716421000503

Kruger, J.-L., & Liao, S. (2023, July 6-7). Busting ghost titles on streaming services. Media for All 10 Conference, Antwerp.

Künzli, A., & Ehrensberger-Dow, M. (2011). Innovative subtitling: A reception study. In C. Alvstad, A. Hild, & E. Tiselius (Eds.), Methods and Strategies of Process Research: Integrative Approaches in Translation Studies (Vol. 94, pp. 187-200). https://doi.org/10.1075/btl.94.14kun

Lång, J., Vrzakova, H., & Mehtätalo, L. (2021). Modelling Gaze Behaviour in Subtitle Processing: The Effect of Structural and Lexical Properties. Journal of Audiovisual Translation, 4(1), 71-95. https://doi.org/10.47476/jat.v4i1.2021

Lee, M., & Révész, A. (2020). Promoting grammatical development through captions and textual enhancement in multimodal input-based tasks. Studies in Second Language Acquisition, 42(3), 625-651. https://doi.org/10.1017/S0272263120000108

Lemhöfer, K., & Broersma, M. (2012). Introducing LexTALE: A quick and valid Lexical Test for Advanced Learners of English. Behavior Research Methods, 44(2), 325-343. https://doi.org/10.3758/s13428-011-0146-0

Liao, S., Yu, L., Kruger, J.-L., & Reichle, E. D. (2022). The impact of audio on the reading of intralingual versus interlingual subtitles: Evidence from eye movements. Applied Psycholinguistics, 43(1), 237-269. https://doi.org/10.1017/s0142716421000527

Liao, S., Yu, L., Reichle, E. D., & Kruger, J.-L. (2021). Using Eye Movements to Study the Reading of Subtitles in Video. Scientific Studies of Reading, 25(5), 417-435. https://doi.org/10.1080/10888438.2020.1823986

Meteyard, L., & Davies, R. A. I. (2020). Best practice guidance for linear mixed-effects models in psychological science. Journal of Memory and Language, 112. https://doi.org/10.1016/j.jml.2020.104092

Mitterer, H., & McQueen, J. M. (2009). Foreign subtitles help but native-language subtitles harm foreign speech perception. PLoS ONE, 4(11), e7785. https://doi.org/10.1371/journal.pone.0007785

Montero Perez, M. (2022). Second or foreign language learning through watching audio-visual input and the role of on-screen text. Language Teaching, 55(2), 163-192. https://doi.org/10.1017/s0261444821000501

Muñoz, C. (2017). The role of age and proficiency in subtitle reading. An eye-tracking study. System, 67, 77-86. https://doi.org/10.1016/j.system.2017.04.015

Netflix. (2020). Timed Text Style Guide: Subtitle Timing Guidelines. https://partnerhelp.netflixstudios.com/hc/en-us/articles/360051554394-Timed-Text-Style-Guide-Subtitle-Timing-Guidelines

Netflix. (2021). English Timed Text Style Guide. https://partnerhelp.netflixstudios.com/hc/en-us/articles/217350977-English-Timed-Text-Style-Guide

Ofcom. (2024). Ensuring the quality of TV and on-demand services. Statement on changes to the TV Access Services Code and updates to Ofcom’s best practice guidelines on access services. https://www.ofcom.org.uk/__data/assets/pdf_file/0032/282668/Statement-on-Ensuring-the-quality-of-TV-and-on-demand-services.pdf

Orrego-Carmona, D. (2015). The Reception of (Non)Professional Subtitling. Universitat Rovira i Virgili. Tarragona.

Pedersen, J. (2018). From old tricks to Netflix: How local are interlingual subtitling norms for streamed television? Journal of Audiovisual Translation, 1(1), 81-100.

Perego, E., Del Missier, F., & Bottiroli, S. (2015). Dubbing versus subtitling in young and older adults: cognitive and evaluative aspects. Perspectives, 23(1), 1-21. https://doi.org/10.1080/0907676x.2014.912343

Perego, E., Del Missier, F., Porta, M., & Mosconi, M. (2010). The cognitive effectiveness of subtitle processing. Media Psychology, 13(3), 243-272. https://doi.org/10.1080/15213269.2010.502873

Rayner, K. (1998). Eye Movements in Reading and Information Processing: 20 Years of Research. Psychological Bulletin, 124(3), 372-422. https://doi.org/10.1037/0033-2909.124.3.372

Rayner, K., Pollatsek, A., Ashby, J., & Clifton, C. J. (2012). Psychology of Reading (2nd ed.). Psychology Press.

Rayner, K., Schotter, E. R., Masson, M. E., Potter, M. C., & Treiman, R. (2016). So Much to Read, So Little Time: How Do We Read, and Can Speed Reading Help? Psychological Science in the Public Interest, 17(1), 4-34. https://doi.org/10.1177/1529100615623267

Rayner, K., Slattery, T. J., Drieghe, D., & Liversedge, S. P. (2011). Eye movements and word skipping during reading: effects of word length and predictability. Journal of Experimental Psychology: Human Perception and Performance, 37(2), 514-528. https://doi.org/10.1037/a0020990

Reichle, E. D. (2021). Computational Models of Reading: A Handbook. Oxford University Press. https://doi.org/10.1093/oso/9780195370669.001.0001

Romero-Fresco, P. (2015). Final thoughts: Viewing speed in subtitling. In P. Romero-Fresco (Ed.), The Reception of Subtitles for the Deaf and Hard of Hearing in Europe (pp. 335-341). Peter Lang.

Schroeder, S., Hyönä, J., & Liversedge, S. P. (2015). Developmental eye-tracking research in reading: Introduction to the special issue. Journal of Cognitive Psychology, 27(5), 500-510. https://doi.org/10.1080/20445911.2015.1046877

Silva, B., Orrego-Carmona, D., & Szarkowska, A. (2022). Using linear mixed models to analyze data from eye-tracking research on subtitling. Translation Spaces, 11(1), 60-88. https://doi.org/10.1075/ts.21013.sil

Szarkowska, A. (2016a). Report on the results of an online survey on subtitle presentation times and line breaks in interlingual subtitling. Part 1: Subtitlers. http://avt.ils.uw.edu.pl/files/2016/10/SURE_Report_Survey1.pdf

Szarkowska, A. (2016b). Report on the results of an online survey on subtitle presentation times and line breaks in interlingual subtitling. Part 2: Subtitling companies. http://avt.ils.uw.edu.pl/files/2016/12/Report_Survey2_SURE.pdf

Szarkowska, A., & Gerber-Morón, O. (2018). Viewers can keep up with fast subtitles: Evidence from eye movements. PLoS ONE, 13(6). https://doi.org/10.1371/journal.pone.0199331

Szarkowska, A., Ragni, V., Szkriba, S., Black, S., Orrego-Carmona, D., & Kruger, J.-L. (2024). Watching subtitled videos with the sound off affects viewers’ comprehension, cognitive load, immersion, enjoyment, and gaze patterns: A mixed-methods eye-tracking study. PLoS ONE, 19(10). https://doi.org/10.1371/journal.pone.0306251

Szarkowska, A., Ragni, V., Szkriba, S., Orrego-Carmona, D., Black, S., Kruger, J.-L., & Krejtz, K. (2024). The impact of video and subtitle speed on subtitle reading: an eye-tracking replication study. Journal of Audiovisual Translation, 7, 1-13.

Szarkowska, A., Silva, B., & Orrego-Carmona, D. (2021). Effects of subtitle speed on proportional reading time. Translation, Cognition & Behavior, 4(2), 305-330. https://doi.org/10.1075/tcb.00057.sza

Winke, P., Gass, S., & Sydorenko, T. (2010). The Effects of Captioning Videos Used for Foreign Language Listening Activities. Language Learning & Technology, 14(1), 65-86.

Winke, P., Gass, S., & Sydorenko, T. (2013). Factors Influencing the Use of Captions by Foreign Language Learners: An Eye-Tracking Study. The Modern Language Journal, 97(1), 254-275.

Wisniewska, N., & Mora, J. C. (2020). Can Captioned Video Benefit Second Language Pronunciation? Studies in Second Language Acquisition, 42(3), 599-624. https://doi.org/10.1017/s0272263120000029

Data Availability Statement

The data that support the findings of this study together with other supplementary materials are openly available in the OSF data repository at https://osf.io/yfzce/

Funding

This study was conducted as part of the Watch Me Project funded by the National Science Centre, Poland, OPUS 19 Programme 2020/37/B/HS2/00304.

*ORCID: 0000-0002-0048-993X; e-mail: a.szarkowska@uw.edu.pl

**ORCID: 0000-0002-4690-9354; e-mail: v.ragni@uw.edu.pl

***ORCID: 0000-0003-4229-592X; e-mail: s.szkriba@uw.edu.pl

****ORCID: 0000-0001-6459-1813; e-mail: david.orrego-carmona@warwick.ac.uk

*****ORCID: 0000-0001-8123-4383; e-mail: sharon.black@uea.ac.uk

Notes

https://www.cambridgeenglish.org/test-your-english/general-english/